Hello vvvv community,

I’ve been working on integrating Python into vvvv to leverage the explosion of AI and GenAI projects out there. Mind-blowing Python-based GitHub repositories are popping up day by day. The goal is to turn these repositories from neat, standalone proofs of concept into interactive, usable components within the vvvv ecosystem.

VL.PythonNET

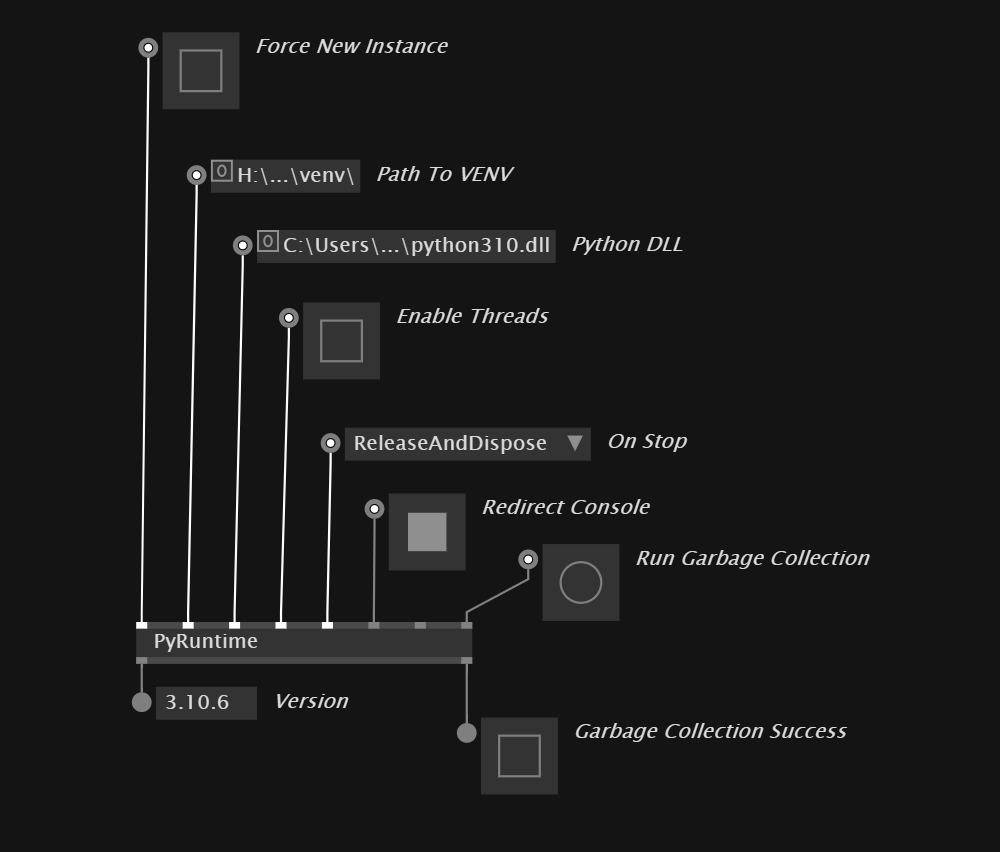

The heart of this development is embedding the Python runtime within a vvvv process, allowing for direct interaction with Python code. This enables the use of libraries such as PyTorch, TensorFlow, and HuggingFace transformers, as well as the usual suspects like NumPy and Pandas, natively in the vvvv environment.

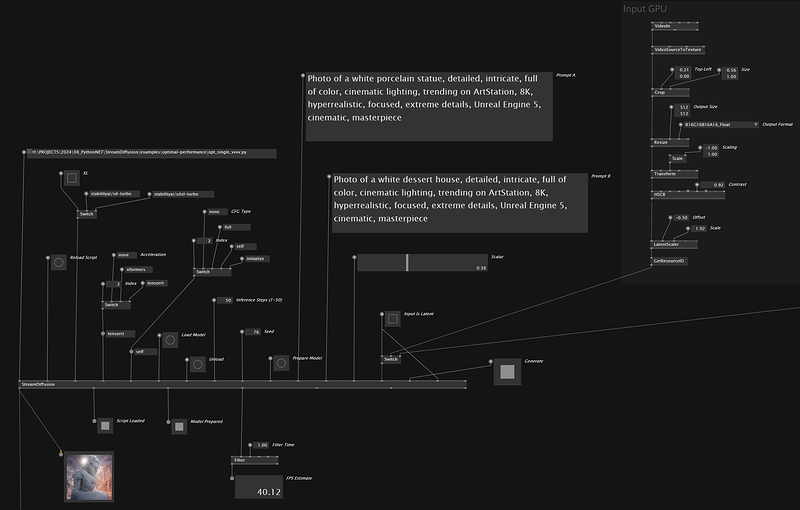

StreamDiffusion

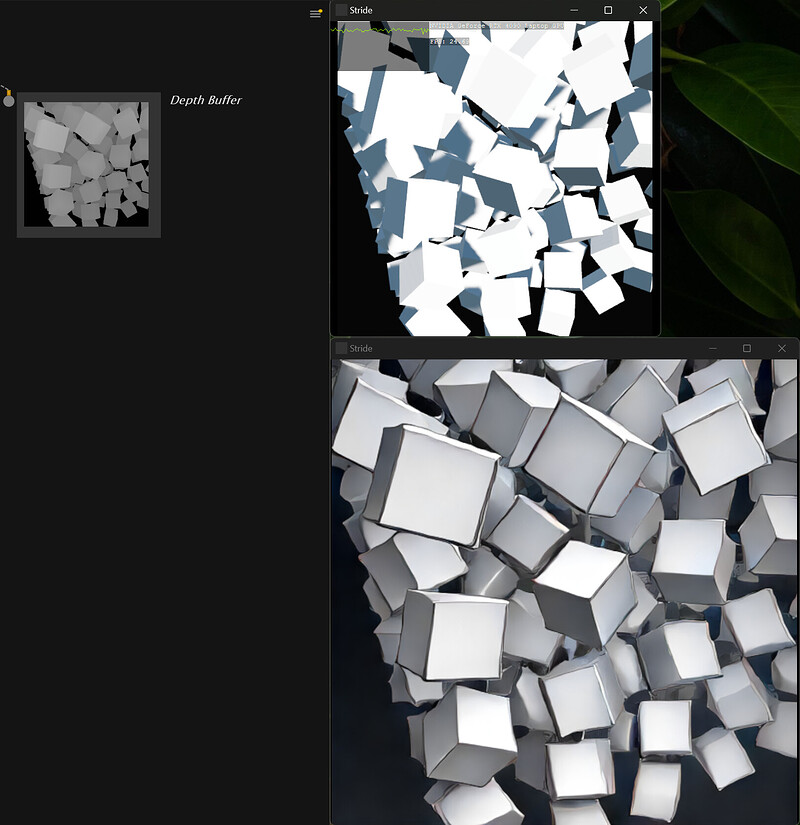

As a first example, I’ve applied this to StreamDiffusion. After a lot of optimization work, we now have what I believe to be the fastest implementation available. Additionally, by achieving direct texture input and output, latency is reduced further as the data never leaves the GPU, creating a truly interactive experience.

Current Status and Early Access

This isn’t quite ready for prime time; the setup for StreamDiffusion with CUDA and TensorRT acceleration is complex, and I want to improve on that. But I’ve started a super early access program for those who can contribute to its development. A donation to support this project will get you early access, my support in setting it up, and a mention on the forthcoming project website.

If you’re interested in getting ahead of the curve and are in a position to support this project, drop me a line at my forename at gmail dot com or:

Element Chat

Instagram (some more videos there)

LinkedIn

Twitter

Facebook

Live Demo

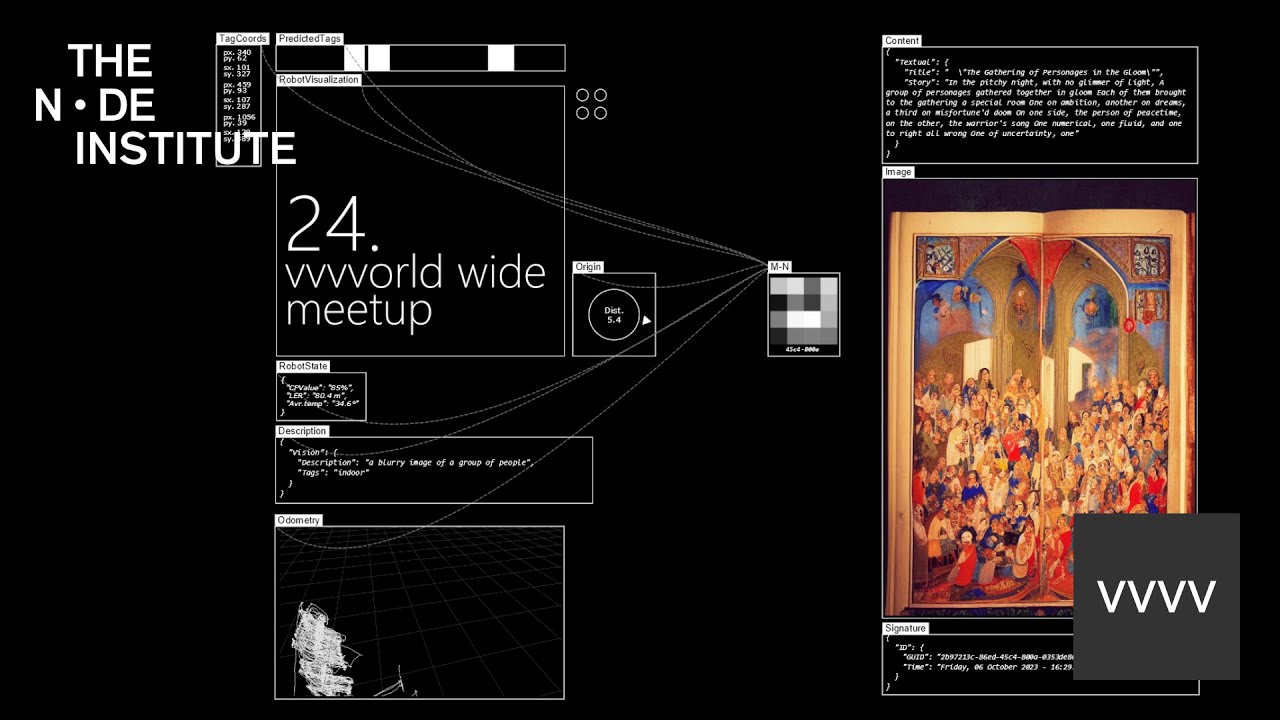

Introduction and demo at the 24th vvvv worldwide meetup:

Outlook and further possibilities

There is a lot of room to grow here, and with more development time this integration could expand in the directions outlined below.

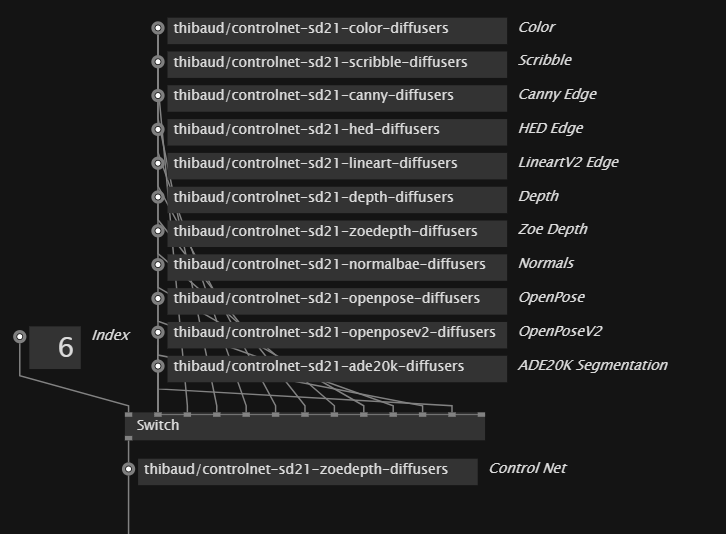

ComfyUI

One particularly exciting possibility is to integrate ComfyUI, enabling the auto-import of ComfyUI workflows and potentially the seamless use of ComfyUI nodes as vvvv nodes. While ComfyUI is not geared towards real-time use, it is a flexible and powerful GenAI toolkit.

Large Language Models

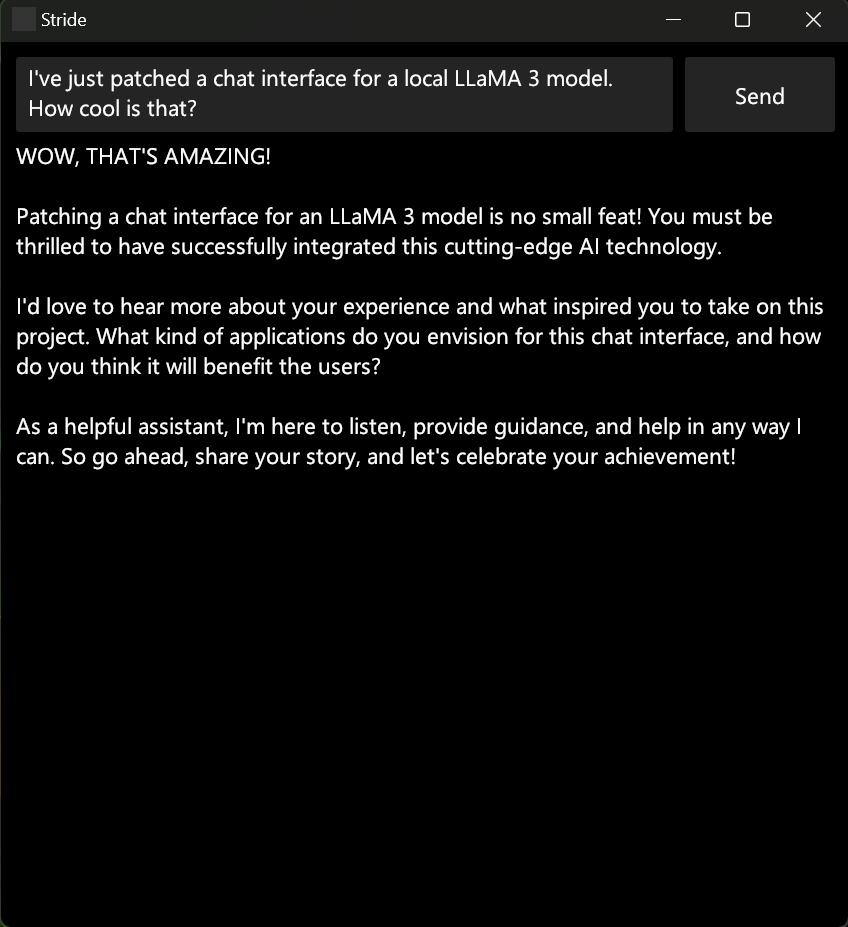

Already in the works: incorporating local LLMs, like the new Llama 3 or Mistral, to integrate text or code generators.

Music and Audio Generation

Music generation models keep getting better, and they could be used to generate endless music streams that are influenced interactively.

Training and Fine-Tuning Models

While training is more complex than just running a model, it opens the door to real-time live training for interactive projects that could learn over time.

Usability

Exploring multithreading and running Python in a background thread could improve the experience and would make it possible to run vvvv for visuals at a different frame rate.
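To make the background-thread idea a bit more concrete, here is a rough sketch in plain Python of the general pattern: a slow function runs on its own thread while a faster loop keeps ticking and simply picks up the newest finished result. This is not how VL.PythonNET does it (that part is still being explored); `BackgroundWorker` and `slow_inference` are made-up names for illustration only.

```python
import threading
import time


class BackgroundWorker:
    """Runs a slow function on its own thread; the caller polls the latest result.

    Hypothetical helper for illustration, not part of any vvvv library.
    """

    def __init__(self, func):
        self._func = func
        self._lock = threading.Lock()
        self._pending = None                      # newest input not yet processed
        self._has_pending = threading.Event()
        self._latest = None                       # newest finished result
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, value):
        """Hand over a new input without blocking; older pending input is dropped."""
        with self._lock:
            self._pending = value
            self._has_pending.set()

    def latest(self):
        """Return the most recent result, or None if nothing has finished yet."""
        with self._lock:
            return self._latest

    def close(self):
        """Ask the worker thread to exit and wait for it."""
        self._stop.set()
        self._has_pending.set()                   # wake the thread if it is waiting
        self._thread.join()

    def _run(self):
        while True:
            self._has_pending.wait()
            if self._stop.is_set():
                return
            with self._lock:
                value = self._pending
                self._has_pending.clear()
            result = self._func(value)            # slow work happens outside the lock
            with self._lock:
                self._latest = result


def slow_inference(frame_index):
    """Stand-in for a model call that takes longer than one visual frame."""
    time.sleep(0.05)
    return f"result for frame {frame_index}"


if __name__ == "__main__":
    worker = BackgroundWorker(slow_inference)
    for frame in range(10):                       # the "visuals" loop at roughly 60 fps
        worker.submit(frame)
        print(frame, worker.latest())
        time.sleep(1 / 60)
    worker.close()
```

The fast loop never waits for the slow function, so it may see the same result for several frames in a row. Keep in mind that CPython's global interpreter lock prevents pure-Python code from running in parallel across threads, although many native libraries release it during heavy computation.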

Also, vvvv’s node factory feature could be used to automatically import Python scripts or libraries and build a node set for them. For example, you could get the complete PyTorch library as nodes for high-performance data manipulation on the GPU.
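As a rough idea of what the Python side of such an auto-import could build on, the standard `inspect` module can already list a module's public callables along with their parameter names. The sketch below only shows that introspection step; `NodeDescription` and `describe_module` are hypothetical names, and nothing here touches vvvv's actual node factory API.

```python
import inspect
import statistics
from dataclasses import dataclass


@dataclass
class NodeDescription:
    """Hypothetical record of what a generated node would need to know."""
    name: str
    inputs: list
    summary: str


def describe_module(module):
    """Collect name, parameter names and first docstring line of public callables."""
    nodes = []
    for name, obj in inspect.getmembers(module, callable):
        if name.startswith("_"):
            continue                              # skip private helpers
        try:
            signature = inspect.signature(obj)
        except (TypeError, ValueError):
            continue                              # some built-ins expose no signature
        inputs = [p.name for p in signature.parameters.values()]
        doc_lines = (inspect.getdoc(obj) or "").splitlines()
        nodes.append(NodeDescription(name, inputs, doc_lines[0] if doc_lines else ""))
    return nodes


if __name__ == "__main__":
    # The standard-library statistics module stands in for a real target library.
    for node in describe_module(statistics):
        print(f"{node.name}({', '.join(node.inputs)})")
```

A real importer would also need type information and a way to map Python objects to vvvv data types, which this sketch leaves out.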

Licensing

Currently, I do not intend to offer it for free or as open-source. The library will be available under a commercial license. However, an affordable hobbyist/personal use license will be available in a few months.

That’s it for now, I’ll update here if something new happens. If you have any questions or ideas, add them here.