After some discussion of the model proposed in my previous post, we decided to stick with our original plan: the per-stream nodes have returned and are now available in both standard and reactive versions. The same is true for the Skeleton nodes.

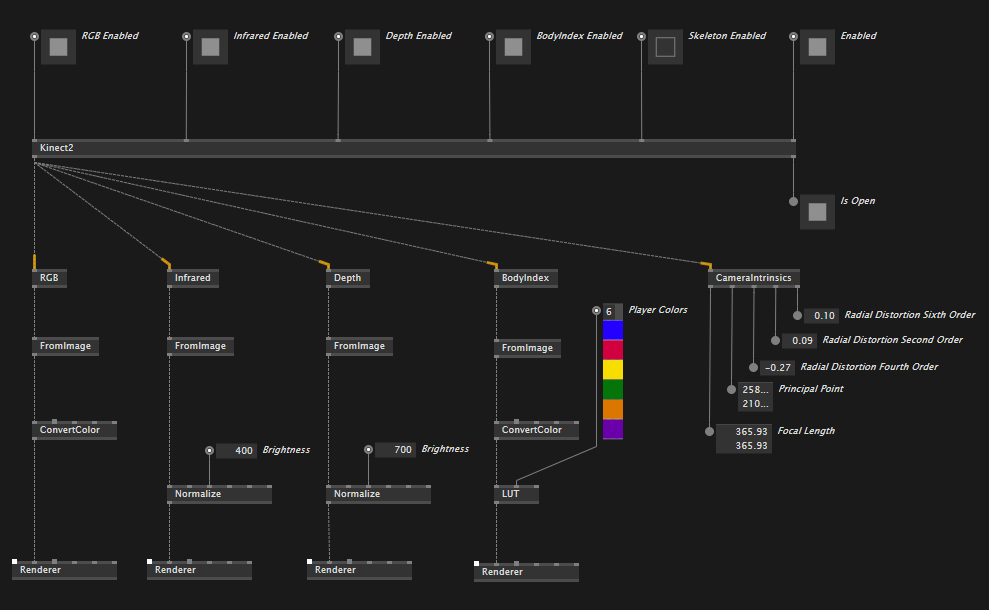

This is what the Overview help patch looks like as of version 0.1.13-alpha:

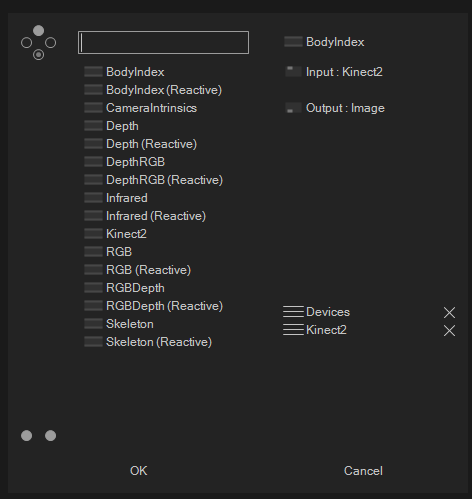

And here is the current nodeset:

Go ahead and give it a spin! You can install it via NuGet by following the instructions here.

We have also added help files: an overview, a Skeleton usage example, and a Skia Depth PointCloud example. Make sure to check them out at:

`[Documents]\vvvv\gamma-preview\Packages\VL.Devices.Kinect2\help\Kinect2\General\`

Cheers!