@sebl I’ll lend these 2c to you… Just remember they’re my 2c, so give them back.

i totally absolutely 100% agree with the posts of eno and ludnny.

since i cannot add more facts, i just wanted to post my YES:

yes, eno, you’re right.

please, devs, don’t forget about vvvv.

vl will not replace vvvv, and when i learn new software, it will not be vl; it will be more user-friendly stuff like unreal,…

but

dear devs, believe me, the vvvv user base is still expanding. especially in my job, i often see new users learn vvvv because it’s the easiest tool for solving ar/vr design problems. they will never learn vl because they are ui designers, not (so much) developers, and vl is not intuitive for them.

so please don’t stop making vvvv better.

I feel you @Eno.

Large patch performance is an issue. We use lots of switches and the evaluate pin, but often it’s not possible to achieve the desired result that way, because you don’t want to completely disable something, just give it less priority. Some way of using the power of multiple cores would also be great.
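A minimal sketch of what “less priority instead of off” could mean, assuming a hypothetical per-subpatch frame divider: a low-priority subpatch still runs, just not every frame. `Subpatch`, `divider` and `run_frames` are illustrative names, not existing vvvv nodes or pins.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Subpatch:
    """Hypothetical subpatch with a priority expressed as a frame divider."""
    name: str
    divider: int                 # evaluate every `divider` frames; 1 = every frame
    work: Callable[[int], None]  # the subpatch's actual computation
    evaluations: int = 0

    def maybe_evaluate(self, frame: int) -> bool:
        # Low-priority subpatches skip most frames; between evaluations a real
        # system would keep outputting the last result (like an S+H).
        if frame % self.divider == 0:
            self.work(frame)
            self.evaluations += 1
            return True
        return False


def run_frames(subpatches: List[Subpatch], n_frames: int) -> None:
    """Simple main loop: offer every frame to every subpatch."""
    for frame in range(n_frames):
        for sp in subpatches:
            sp.maybe_evaluate(frame)


render = Subpatch("render", 1, lambda frame: None)
stats = Subpatch("stats", 10, lambda frame: None)
run_frames([render, stats], 60)
print(render.evaluations, stats.evaluations)  # 60 6
```

Unlike a plain evaluate pin, the stats subpatch is never fully off; it just gets a smaller share of the frames.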

I am already using VL in a small (but performance-hungry) part of the project. There aren’t really any major single bottlenecks, but the sheer size of the project adds up to a requirement for lots of CPU and GPU power. With Superphong enabled, I need a GTX 1070 and an i5-7600K to get decent performance (around 45 fps at 1080p); on anything less it runs rather slowly.

Even though I have some basic coding skills, I always felt that vvvv had just the right amount of complexity to be understood by non-coders while still letting them create larger projects. So I share the sentiment that I would really love to see some improvements to vvvv, or a roadmap for them.

One of those little niggles I always wanted fixed is zooming in patches like in VL. It can’t be very hard, as it has worked in the finder forever. I don’t understand why this useful UI enhancement hasn’t made it into vvvv after so many years but is in VL from the start; I can see no downside to it, only upsides. Also S+R sorting/searching as eno suggested, so I don’t have to scroll through the 200 or so send strings to find one: right now they are vaguely sorted by time of creation, but some just appear in random places. I think some simple UI/UX improvements would be a good start to let non-VL users feel that improvements are coming to vvvv that are not purely “technical”, and to get a new generation of users excited about this incredible tool, just as you did with the refreshed look and feel of Node17.

Happy to assist in any way I can.

@seltzdesign

the vvvv UI is based on AddFlow, if i’m not mistaken. the finder uses something else; that’s why there are differences.

this comes down to the old discussion of why not iterate on vvvv: replace the UI, then replace the delphi core and nodes, and after that maybe think about new language features. but this should have happened years ago, maybe right after the introduction of c# plugins.

devs decided to go a different route as we know.

not sure what the solution would be right now. VL is not ready to replace vvvv, and vvvv is not getting enough dev time because VL is not ready yet.

@u7angel thanks for the clarification. That makes sense. Oh well, that’s just how it goes, I guess.

Let’s see what the future holds. I guess vvvv will always be there as it is today, but more and more things will make it into VL. VL actually also has the zoom feature, even though I think the VL UI is not there yet: too confusing and inconsistent for my liking. I get how vvvv, its windows and the Inspektor work, but I just don’t “get” the VL UI yet. Trying to make everything totally minimal always leads to compromises down the road, when things get more complex and minimal quickly turns into complicated. I can compare it to Grasshopper, which I use a lot and which sits at the other end of the spectrum UI-wise: everything is really graphical, and I have to say it’s much more fun to use. And even though I don’t like Max/MSP so much, I think their approach to GUI, and how they mix the programming and presentation versions of windows, is pretty much the perfect balance for me and how I would love vvvv to look.

I’m really glad that after an initial silence, this discussion is picking up among pro users, and that many people express the urgent need for a feature update for the old v4.

I agree that it is probably a waste of time to bring smaller features like zoomable UI to the old v4. I can live with the way it is right now for a while.

But I want to stress that I think it is absolutely crucial for the survival of the v4 community to make sure it’s not drying out in the transition process. It’s worth investing a couple of months into the old v4 to cure the most pressing needs and to keep up with the competition. The people you keep entertained now will bring VL to life with their experience and passion. It will be much harder to build a new community from scratch.

Find a way to increase the power of v4 by offering a simple way of using more of the available hardware resources. Don’t reinvent the VheeL.

I have a question: would those super big projects be easier to build, or perform better, in other software? Unity, Unreal or TouchDesigner? Or would they have the same problems in the end, requiring complex programming (like vl) to make them work?

I’ve never seen really complex, big projects in Touch.

Clearly Unity and Unreal have them (games), but that’s usually what that software is made for.

Unity and Unreal are object-oriented and vvvv is not; that’s why such overhead happens. That’s why using vl in conjunction with flow control will give you more performance than a pure vvvv solution. On such big projects I would recommend using a custom plugin set to reduce overhead…

Adding my 2 cents, I can only repeat @eno’s remark: “(…) it is absolutely crucial for the survival of the v4 community to make sure it’s not drying out in the transition process.(…) It will be much harder to build a new community from scratch.”

@eno

That’s why I stressed certain aspects so much in a few threads about VL: because I care about all of this, not for the sake of critique.

Thank you all for all the reasonable and well put input. While being silent we are still investigating what is doable and what not. Please be patient. We’ll blog the results. Thank you all!

Directly after our project analysis session in Berlin I went on holiday, without finding the time to write an extensive review of our findings beforehand. I promise to do so when I get back. It was an insightful meeting, though!

As promised, I will now try to summarize the findings from our session in Berlin.

To briefly outline the problem again: on our last project, the patch graph consumed 10 ms (10k ticks) in its idle state, literally rendering and computing nothing but itself, which reduced the net computation time available for content to about 5-8 ms.
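The arithmetic behind the 5-8 ms figure, as a small sketch. It assumes a 60 fps target, which the post doesn’t state, and takes the tick-to-millisecond ratio from the post itself (10 ms ≈ 10k ticks).

```python
# Ratio taken from the post: 10 ms of idle evaluation was shown as 10k ticks.
TICKS_PER_MS = 1_000


def frame_budget_ms(fps: float) -> float:
    """Total time available per frame at a given frame rate."""
    return 1000.0 / fps


def content_budget_ms(fps: float, idle_ticks: int) -> float:
    """Time left for actual content after the patch graph's idle overhead."""
    return frame_budget_ms(fps) - idle_ticks / TICKS_PER_MS


# Assumed 60 fps target: 16.67 ms per frame minus 10 ms of idle graph overhead.
print(round(content_budget_ms(60, 10_000), 2))  # 6.67
```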

The question was and is how this can be improved without creating a hell of evaluate pins and S+Hs etc., which makes the patch hard to read and work on.

Spoiler: The sad news is, there isn’t an easy way out. But VL can help.

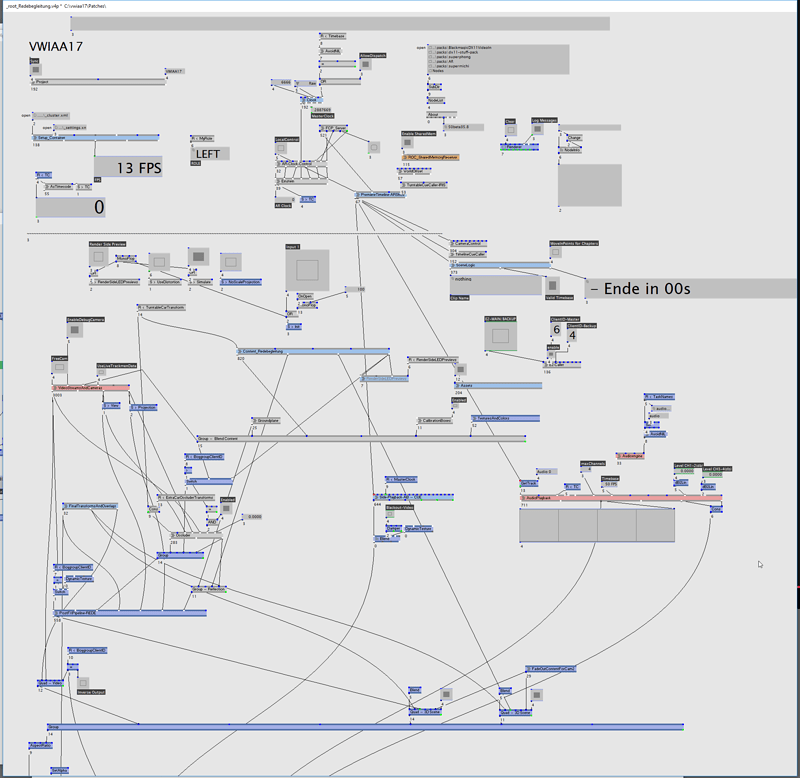

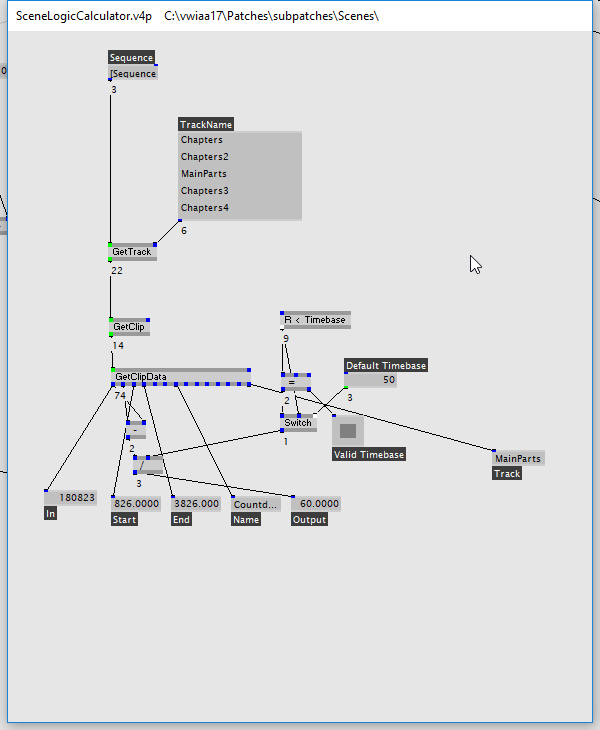

I have to go little bit into detail here. If you look at our root patch:

basically everything above and around the line at the top are just framework patches. Reading settings, switching scenes, computing timelines, computing and verifying time etc. Nothing fancy, but necessary. Also no big slicecounts. Mostly flat logic. And still counting up for 2.5k ticks.

What to do about that?

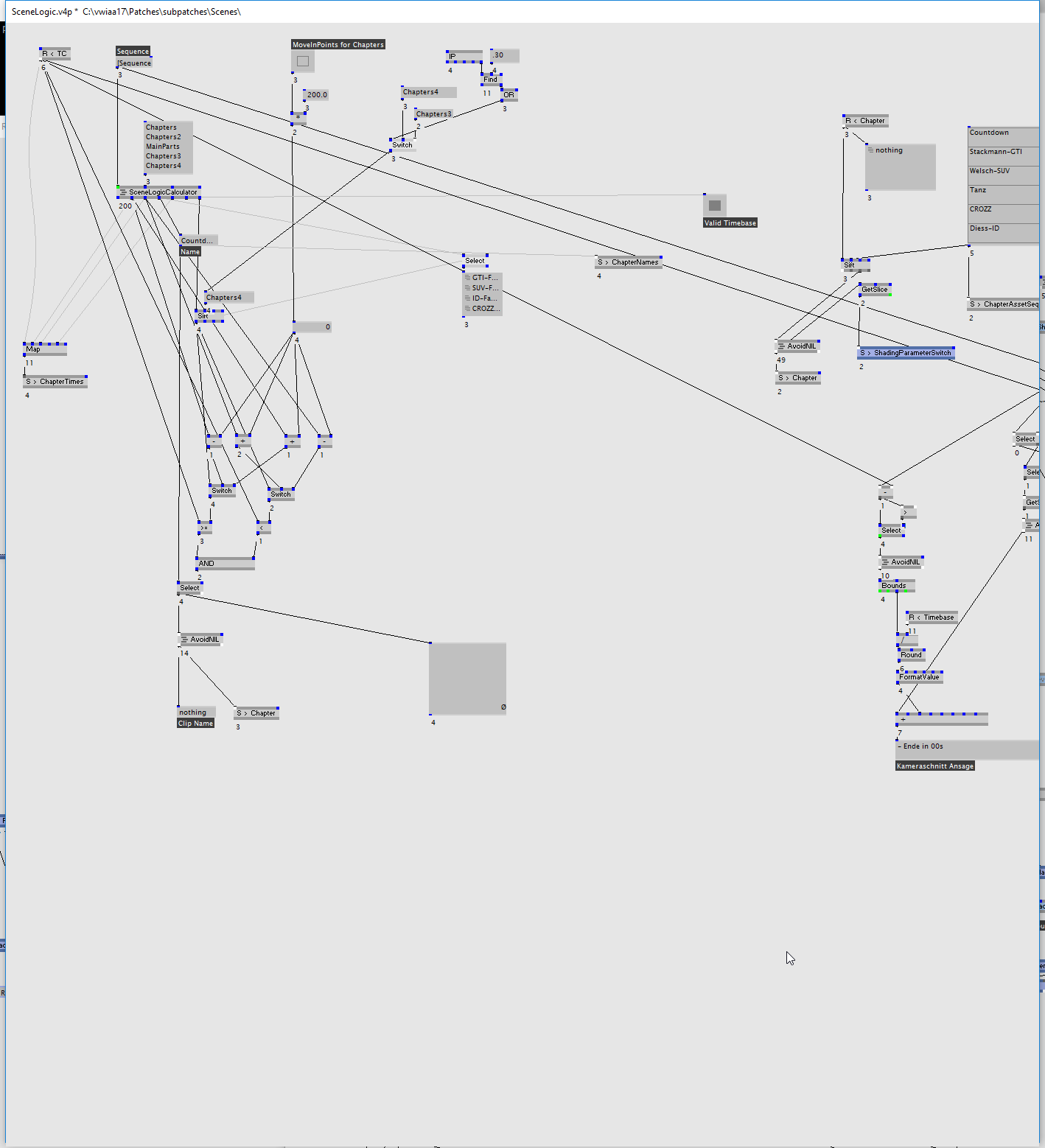

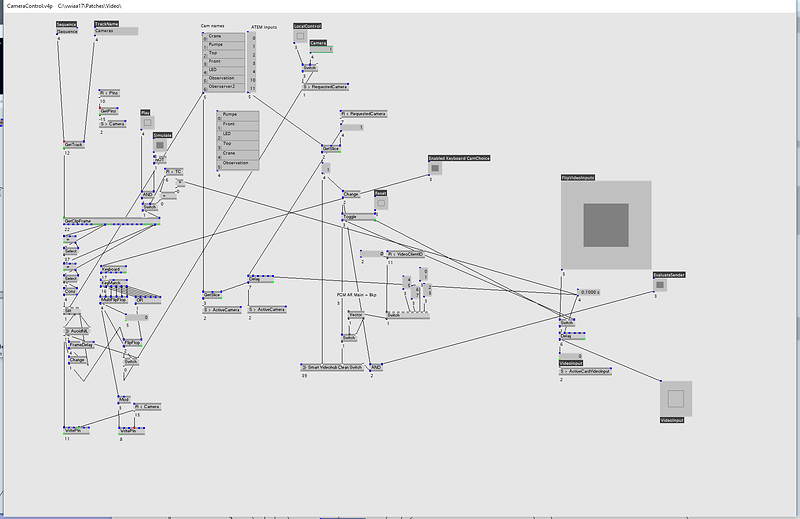

Usually you would say, that you write plugins to get this kind of computation cheaper. Yet, we already have a lot of custom plugins. Look at these boring patches, they don’t do anything special:

What makes these patches still slow?

Normally we don’t write monolithic plugins that serve a single special case; we write generalized node sets so we can use them in a variety of ways. But in order to use them in a specific scenario, we always need certain helper nodes from v4, like “+”, “sift”, “select”, “switch”, “getslice”, whatever, to feed the plugins the specific data for the specific use case. So we have to use a lot of extra v4 nodes to make the plugins usable.

This incurs two kinds of overheads.

One is the overhead each v4 node brings with it: it needs to compute every frame (even if it only has to check whether it has to compute), and even single values are internally computed as a spread. So every v4 node has a cost. The other is even more expensive: the overhead of the plugin interface. The more you break down the functionality of your plugins into nodes of smaller, reusable functions, the more often you have to go in and out of plugin space. This in itself is quite a cost, as I learned; I wasn’t so aware of it before.
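The two overheads can be written as a toy cost model. The per-node and per-crossing costs below are made-up numbers for illustration, not measurements of v4; the point is only how the totals scale when the same logic is split across many small nodes instead of one.

```python
from dataclasses import dataclass


@dataclass
class CostModel:
    node_overhead: float      # fixed per-frame cost of any v4 node (hypothetical ticks)
    crossing_overhead: float  # cost of one trip in/out of plugin space (hypothetical ticks)

    def patch_cost(self, helper_nodes: int, plugin_nodes: int, work: float) -> float:
        """Per-frame cost: every node pays node_overhead, every plugin node also
        pays one crossing, and `work` is the actual computation."""
        nodes = helper_nodes + plugin_nodes
        return nodes * self.node_overhead + plugin_nodes * self.crossing_overhead + work


model = CostModel(node_overhead=5, crossing_overhead=10)
split = model.patch_cost(helper_nodes=12, plugin_nodes=8, work=20)  # many small reusable nodes
fused = model.patch_cost(helper_nodes=0, plugin_nodes=1, work=20)   # same logic in one plugin
print(split, fused)  # 200 35
```

The useful work is identical in both cases; only the number of nodes and boundary crossings changes.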

This is where VL comes in, and this is also my first big learning from that day in Berlin.

VL is not just another way of writing plugins in the sense of small, abstracted functions. It should allow you (and, as I know now, it does) not only to write plugins as you did in C#, with the same overhead of continuously crossing in and out of plugin space, but it also makes it very easy to move the special cases into plugin space. Just as you do in v4 for this or that specific scenario, you can patch away in VL and let the VL patch, i.e. the compiled code, also do all the special work that is needed (in this case computing camera switching state, scene switching, clock verification etc.) without leaving plugin space, and return only the finished value to v4.
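A toy illustration of the difference, assuming one counter stands in for all host/plugin boundary overhead (real v4 helper nodes are native and cheaper than a plugin crossing, so this exaggerates for clarity). The helpers are hypothetical stand-ins for generic nodes like “select” and “getslice”; the fused version composes the same helpers but crosses the boundary once and returns only the finished value.

```python
from typing import Callable, List


class PluginBoundary:
    """Counts how often the host calls into plugin space (toy model)."""

    def __init__(self) -> None:
        self.crossings = 0

    def call(self, fn: Callable, *args):
        self.crossings += 1
        return fn(*args)


# Generic, reusable building blocks (hypothetical stand-ins for small nodes).
def add(a: float, b: float) -> float:
    return a + b


def select_active(values: List[float], active: List[bool]) -> List[float]:
    return [v for v, on in zip(values, active) if on]


def get_slice(values: List[float], index: int) -> float:
    return values[index % len(values)]  # wraps around like a spread index


def scene_time_split(boundary: PluginBoundary, times, active, offset, index) -> float:
    """v4 style: each generic step is a separate trip through the boundary."""
    selected = boundary.call(select_active, times, active)
    picked = boundary.call(get_slice, selected, index)
    return boundary.call(add, picked, offset)


def scene_time_fused(times, active, offset, index) -> float:
    """VL style: the special case runs entirely inside plugin space."""
    return add(get_slice(select_active(times, active), index), offset)


split_boundary, fused_boundary = PluginBoundary(), PluginBoundary()
args = ([1.0, 2.0, 3.0], [True, False, True], 0.5, 1)
print(scene_time_split(split_boundary, *args), split_boundary.crossings)        # 3.5 3
print(fused_boundary.call(scene_time_fused, *args), fused_boundary.crossings)  # 3.5 1
```

The generic helpers stay reusable either way; what changes is that the special-case composition happens on the plugin side.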

This is something essentially new for me, as someone who is not so familiar with written code. Plus, for more complex stuff you don’t need an external IDE; you can continuously work on and compile your code. It is this on-the-fly compilation that really makes it possible to get closer to your specific use cases with compiled code.

To put this into figures: after my holiday I started porting the first patches and functionality to VL.

Our ‘Clock’ patch, which averaged 250 ticks, is now down to 40.

A specialized patch for receiving and parsing tracking data is down to 40, too, from over 300 previously.
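For scale, the two reported reductions work out as follows. The tracking figure uses 300 as the baseline, so its factor is a lower bound, since the post says “over 300”.

```python
from typing import Tuple


def reduction(before_ticks: int, after_ticks: int) -> Tuple[int, float]:
    """Ticks saved and the factor by which the cost shrank."""
    return before_ticks - after_ticks, before_ticks / after_ticks


reported = {
    "Clock": (250, 40),
    "Tracking receive/parse": (300, 40),  # "over 300", so 300 is a lower bound
}

for name, (before, after) in reported.items():
    saved, factor = reduction(before, after)
    print(f"{name}: {saved} ticks saved, {factor:.2f}x fewer ticks")
```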

And I can now see, how this pattern extends to many of the other tasks we have in our projects.

Of course this doesn’t apply to all problems, especially not to heavy number crunching on large spreads, where the differences get smaller. But it does apply to many of the framework problems of large patches, which was the original question of this thread.

Further up in this thread I said that a feature update for v4 is necessary because VL is not yet ready to take over. Understanding VL better now, I must say that is only partly true. VL isn’t ready for design work; the problems regarding this have been highlighted and are on the agenda. But in other ways it is very ready to take over. And after two weeks of occasionally investing time in it, I find it (quite) usable. It’s still not perfect, some things are cumbersome, and it crashes from time to time. But it’s mature enough to open the door to taking it into production more and more.

That’s it from me on the VL side of things. I’m hooked now. I can see how it integrates with v4, and how it is superior to it.

But why can’t everybody see that? More on that further down, in an observation about communicating VL.

But before that: what about the other issues of this thread? How can v4 be improved to perform better?

One thing we found is that v4 is actually bad at identifying static branches of the patch graph.

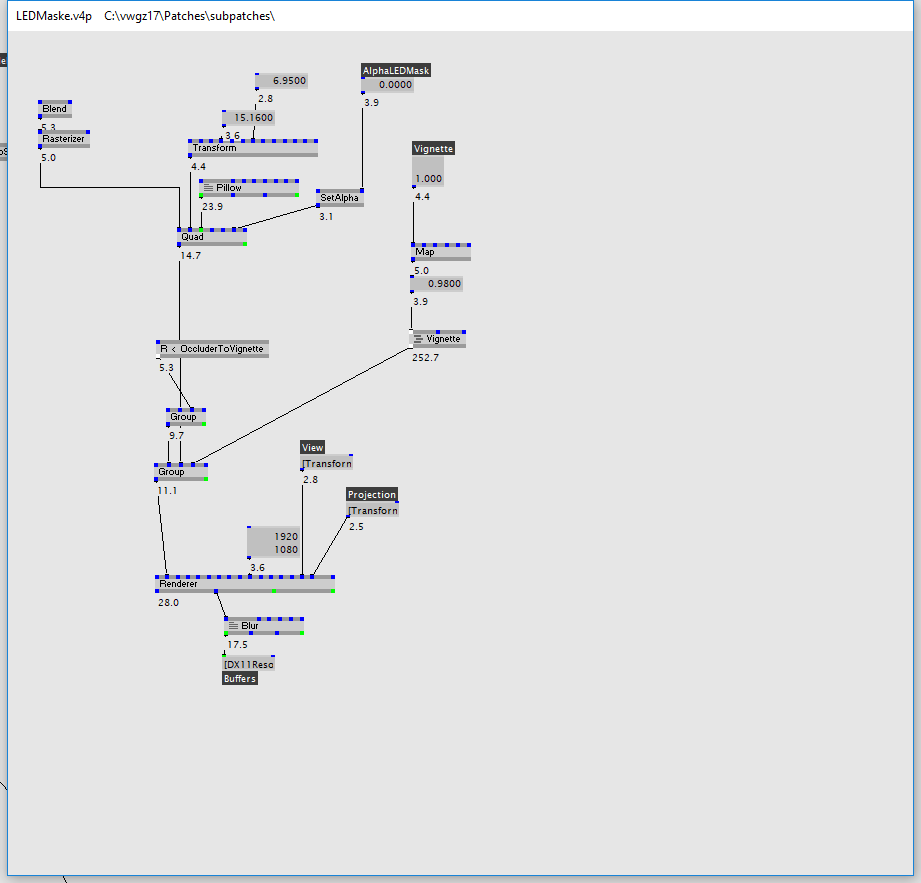

If you look at this patch:

You obviously see the blend, the rasterizer, the transform, the pillow etc. - why do they consume ticks at all? They don’t change. And that should be able to detect from looking at the patch graph alone, that these nodes can’t change, and thus also don’t need to evaluate each frame.

So I hope for better evaluation strategies based on smarter graph parsing. This could be implemented and would save a lot of CPU.
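One way such graph parsing could work, as a sketch under assumptions: a node is static if it is not itself a source of change (time, mouse, network input, ...) and everything upstream of it is static. Static nodes could then be evaluated once and cached until the user edits the patch. The node names mirror the patch above, but the wiring is hypothetical, and the sketch assumes an acyclic graph (no FrameDelay-style feedback).

```python
from typing import Dict, List, Set


def find_static_nodes(inputs: Dict[str, List[str]], dynamic_sources: Set[str]) -> Set[str]:
    """Return the nodes whose output cannot change from frame to frame.

    `inputs` maps every node to the nodes connected to its input pins.
    `dynamic_sources` are nodes that may change on their own each frame.
    Assumes the graph is acyclic.
    """
    memo: Dict[str, bool] = {}

    def is_static(node: str) -> bool:
        if node not in memo:
            memo[node] = node not in dynamic_sources and all(
                is_static(upstream) for upstream in inputs.get(node, [])
            )
        return memo[node]

    return {node for node in inputs if is_static(node)}


patch = {
    "Blend": [],
    "Rasterizer": [],
    "Transform": [],
    "Pillow": ["Transform"],
    "LFO": [],
    "Renderer": ["Blend", "Rasterizer", "Pillow", "LFO"],
}
static = find_static_nodes(patch, dynamic_sources={"LFO"})
print(sorted(static))  # ['Blend', 'Pillow', 'Rasterizer', 'Transform']
```

Only the LFO and the Renderer it feeds would still need to run every frame; an edit in the editor would have to invalidate the cached results.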

The other ideas … about the easy way out. About just sending parts of the graph to another thread. About a multithreaded v4 …

What I’ve learned about these ideas leads to the really important question of communication for the development of VL and v4.

After a really long day (until 2 a.m.) of looking at our project, trying to understand VL and taking first steps, Sebastian pulled me over to his desk, saying “hey, I’ve got to show you something”. What I saw was a prototype of v4 running on multiple evaluator processes. I was baffled.

After that, Elias took over and showed me a v4 where the interface was running in a separate thread, with rendering unaffected by UI interactions.
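The post doesn’t describe how that prototype works, so the following is only a generic sketch of the pattern it suggests: the UI thread never touches the graph directly, it just enqueues edits, and the render loop drains whatever is pending at the start of each frame without ever blocking. `Evaluator`, `render_frame` and the pin names are hypothetical.

```python
import queue
import threading
from typing import Dict, List, Tuple


class Evaluator:
    """Toy render loop that never waits for the UI thread."""

    def __init__(self) -> None:
        self.edits = queue.Queue()  # thread-safe channel from UI to renderer
        self.pins: Dict[str, float] = {}
        self.frames = 0

    def apply_pending_edits(self) -> None:
        # Non-blocking drain: take only what is already queued, then return.
        while True:
            try:
                pin, value = self.edits.get_nowait()
            except queue.Empty:
                return
            self.pins[pin] = value

    def render_frame(self) -> None:
        self.apply_pending_edits()
        # ... evaluate the patch graph and render using self.pins ...
        self.frames += 1


def ui_thread(evaluator: Evaluator, changes: List[Tuple[str, float]]) -> None:
    """Simulated user interaction: only enqueues edits."""
    for pin, value in changes:
        evaluator.edits.put((pin, value))


ev = Evaluator()
ui = threading.Thread(target=ui_thread, args=(ev, [("Radius", 0.5), ("Alpha", 1.0)]))
ui.start()
ui.join()  # joined here only to make the demo deterministic
ev.render_frame()
print(ev.pins, ev.frames)  # {'Radius': 0.5, 'Alpha': 1.0} 1
```

The renderer only ever pays for a non-blocking queue check per frame; slow UI work stays on its own thread.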

We talked for a very long time. For both prototypes there are very good reasons, really severe problems, why they are not a reality yet, and probably never will be (one more than the other). I won’t give too much away here; I think the devs should explain the details at some point.

But what really struck me is that behind a facade of … silence? ignorance? … of the v4 group, there actually is something going on: the proposals and requirements discussed in this forum are taken seriously and are being investigated. This is not visible from the ‘outside’, from the user base. And I think this is a crucial thing for the v4 community to understand.

I would identify an elitist split between the v4 users and the devs, which must have happened slowly over the last few years.

I remember times when we marveled at the devs, culminating in celebrated keynode presentations on new features and techniques, with a feeling that they were ahead of their time, knowing what the right steps for the future would be.

With the focus on rewriting v4, known as VL, this confidence faded. Other contributions made the day for the v4 users. And with an unspoken disappointment about this emptiness, the trust that the devs know what to do also faded: the trust that the right decisions would be made for ‘us’.

The visit to Berlin changed this perspective for me; it showed me that the spirit is still there. The development is smart and necessary. It just has a communication problem. How to do that better would be a discussion for a separate thread; I can’t cover it in detail here.

One thing would be to disclose experiments, like the ones on a multithreaded v4, and let the community take part in the reasoning about why to take this path and not the other one. This strengthens confidence.

The other might be to pick people up where they are: to show that you can do the same things in VL, rather than showing how VL can solve problems that I didn’t even have before I knew it.

And last: even though I’m confident again about the development and the steps taken by the v4 group, I still think it is necessary to broaden the field of development with new people, new forces that focus on specific user requirements and needs, like timeline, render pipeline, asset handling etc. That can happen in parallel and can probably also be reused in a VL world.

So long. Thanks for reading.

Eno

Crazy post, really interesting. Thank you for taking the time to explain a lot of things.

Thank you Eno, (and devs). That’s very interesting.

thanks, eno, for starting this thread and sharing your experiences. these are really valuable insights from behind the scenes that the ordinary patcher would not get without this discussion.

i totally agree that proper communication is the key to successful relationships over a long time. also, proper communication is a lot of work that takes time (and a strategy: who wants to publish roadmaps when you cannot say for sure whether you can keep them?). i guess every one of us knows how valuable time resources can be while developing something.

in my opinion VL is not only a new product but also a big research project, in which (as in all research) many unknown paths have to be tried and rejected again, and problems have to be solved that most of us probably don’t even have the slightest idea exist. communicating what’s going on at the moment and having a vague roadmap of what will/should happen (or what cannot happen because of whatever issues) would help avoid the feeling of not knowing where all this is going, which leads to people losing trust. still, i’m totally aware how hard this can be, and hey, the devvvvs are actually easier to reach than before (in the matrix).

thanks for all your input and great work!

big up Eno

hey eno, thanks for your elaborate answer and analysis. good to hear that we could get you hooked. i’d be curious to hear your feedback on including [dynamic buffers in vl](https://discourse.vvvv.org/t/vl-custom-dynamic-buffer/15703) in your workflow, as i suspect that using those you can go a great deal further than only doing “business logic” in vl and also “create content”, as you put it in #6 above.

and then please also note that we’ve finally added PinEditors in the latest alphas, which addresses one of your concerns regarding vl’s usability.

and then regarding your last post:

this is why we’re currently putting so much energy into getting node writing and library use in vl right, to provide a comfortable environment for contributing developers.

now excuse me for picking only a few of the other things said above to go into detail on:

reading concerns like these…

…is understandable, because it must be really hard to see from a pure user perspective yet. we know that the convincing demos are not there yet. i wrote a new introduction to vl for vvvv users in the graybook that hopefully clarifies our perspective a bit. as with the workshop of the same name at node17, it is exactly part of our strategy to pick people up where they are with vvvv and help them understand how they can solve their vvvv problems in vl (and then so much more…). please ask your specific questions!

then regarding:

i’m glad eno found the words that we apparently didn’t yet find

finally, we often get the request for talking more about what we do at the moment and what we’re planning for the future. just to make sure everyone is aware of our devvv blog and monthly recaps where we already try to be quite open about things. i definitely see room for improvement in that regard myself and hope to be able to go about that soon.

i’m very much interested in the UI thread topic of vvvv, not just because the vvvv UI itself interrupts rendering. i guess UI plugins have the same problem.

i can only guess that changes to the vvvv UI thread might apply to UI plugins as well? is this “the” problem, or what prevents a change here?
