hey bjoern,

Yes, it wasn’t an arbitrary decision; it was technically motivated.

It was quite challenging to design the adaptive node system and, later on, to make it possible to even precompile generic adaptive patches into DLLs. We were basically ahead of C# with regard to this kind of expressiveness. With the 5.0 release, we went one step further and made it possible to instantiate generic types via reflection. All these steps are challenging to realize, but it’s very nice to see that we keep managing to improve the adaptive system. So I can imagine that we’ll have adaptive process nodes at some point. Actually, some ideas have already come up lately.

Along the way, new design patterns are also emerging for how to use the latest feature: Generic interfaces - #7 by Elias

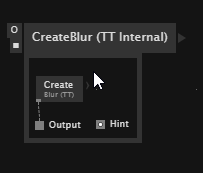

This DynamicModifier node already has a very adaptive feel to it, even though we don’t have adaptive process nodes. The trick is to abstract over the construction of the modifier via the adaptive system. There is CreateModifier (Adaptive)<TModel, T1, T2> and two different implementations for it…

This might be interesting to you, or it might just lead you down a path that doesn’t really work out for you. I just wanted to point to the somewhat related work, so that you can evaluate the options as long as we don’t have adaptive process nodes.
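To make the DynamicModifier trick a bit more tangible outside of a patch, here is a rough Python analogue. It assumes that the adaptive CreateModifier<TModel, T1, T2> can be modeled as a lookup from type arguments to a factory. The registry, the `implementation` decorator and both modifier classes are hypothetical illustrations, and the lookup happens at runtime here, whereas the adaptive system resolves the implementation at compile time.

```python
from typing import Callable, Dict, Tuple

TypeArgs = Tuple[type, type, type]  # (TModel, T1, T2)

# Hypothetical registry standing in for the adaptive CreateModifier<TModel, T1, T2>.
_IMPLEMENTATIONS: Dict[TypeArgs, Callable[[], object]] = {}


def implementation(model: type, t1: type, t2: type):
    """Register a factory as the implementation for one concrete (TModel, T1, T2)."""
    def register(factory: Callable[[], object]) -> Callable[[], object]:
        _IMPLEMENTATIONS[(model, t1, t2)] = factory
        return factory
    return register


def create_modifier(model: type, t1: type, t2: type) -> object:
    """Pick the implementation that matches the type arguments."""
    try:
        factory = _IMPLEMENTATIONS[(model, t1, t2)]
    except KeyError:
        raise TypeError(
            f"no implementation for ({model.__name__}, {t1.__name__}, {t2.__name__})"
        ) from None
    return factory()


class ScaleModifier:  # hypothetical modifier for floats
    def apply(self, value: float) -> float:
        return value * 2.0


class UpperModifier:  # hypothetical modifier for strings
    def apply(self, value: str) -> str:
        return value.upper()


@implementation(dict, float, float)
def _make_scale() -> object:
    return ScaleModifier()


@implementation(dict, str, str)
def _make_upper() -> object:
    return UpperModifier()


class DynamicModifier:
    """Generic consumer: it only asks create_modifier and never names a concrete modifier."""

    def __init__(self, model: type, t1: type, t2: type):
        self._modifier = create_modifier(model, t1, t2)

    def update(self, value):
        return self._modifier.apply(value)
```

The point of the design is that DynamicModifier itself stays generic; adding a new combination means registering one more implementation, not touching the consumer.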

But here is a question: imagine you had adaptive process nodes and a chain like this:

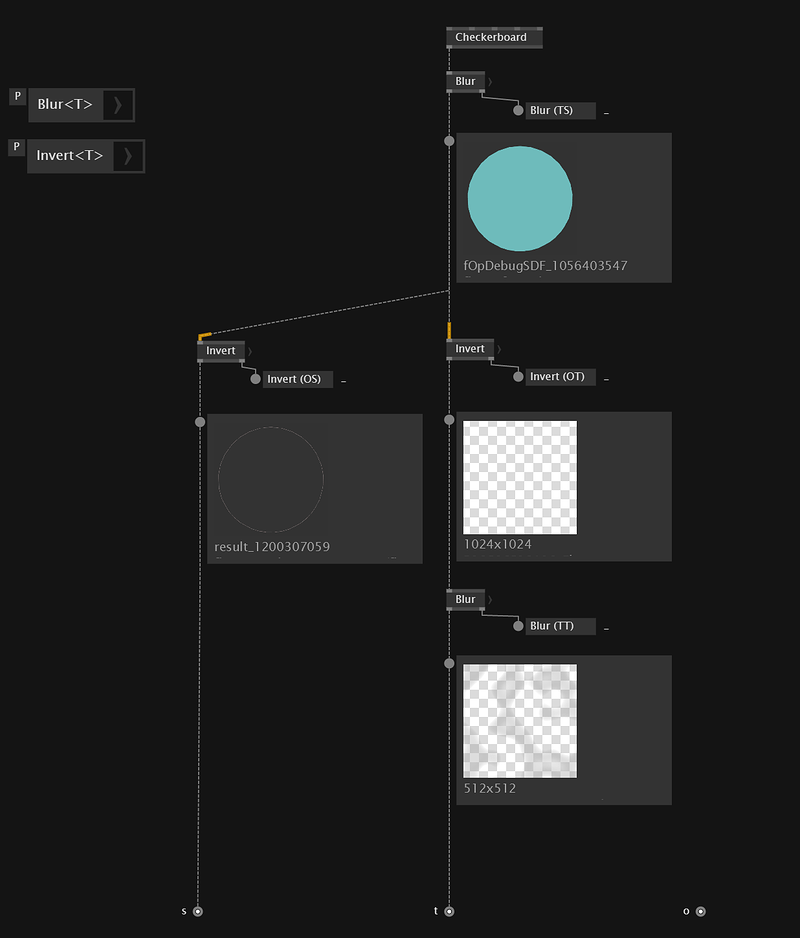

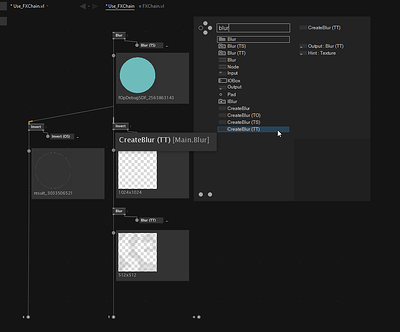

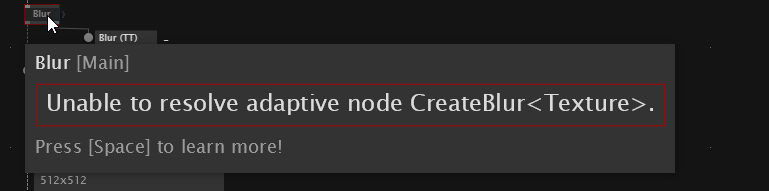

Blur → Invert → Blur

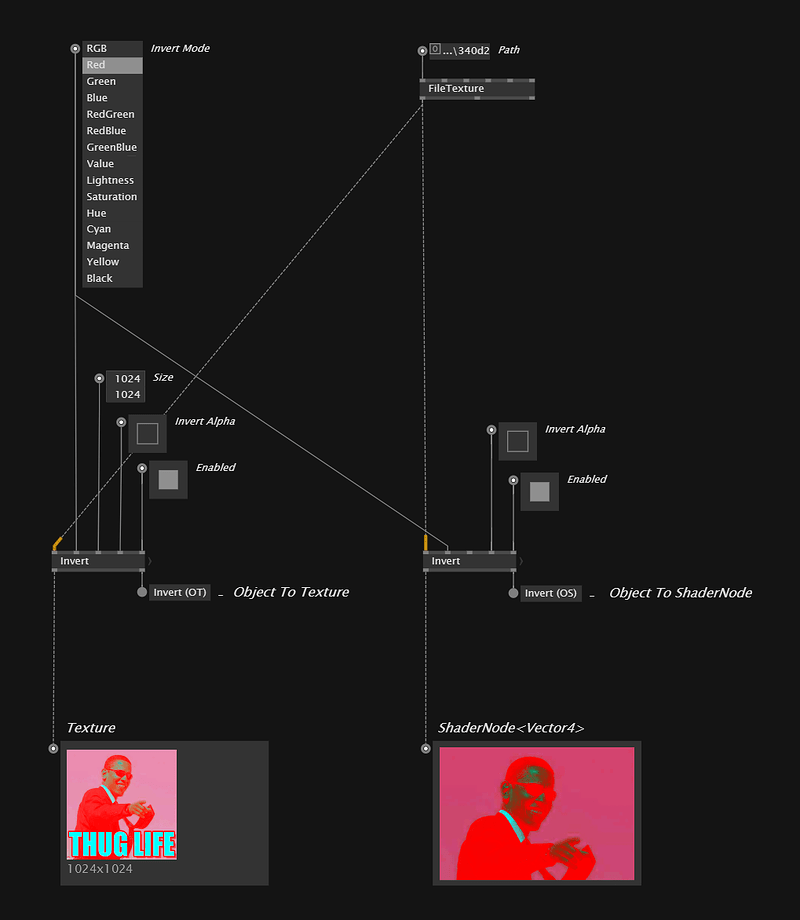

The system would ideally figure out that on the second link, only Texture is an option. So only two Invert candidates would be left: the ones that output a Texture. But how would such a system decide between those two? We would need to tell the system to always favor ShaderNode<Vector4> over Texture. Just for the record: this is yet another feature request. As of now, the adaptive system would simply not be able to decide. You as a user would need to put type annotations in between, and suddenly it would get more complicated than not using the adaptive system at all and leaving it to the user to pick the right version.

I am not sure how I would put this feature request into words. But certainly, some part is missing to make your idea work.
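The ambiguity and the missing "priority" piece can be modeled in a few lines. This sketch assumes Invert has one candidate per combination of Texture and ShaderNode<Vector4> for input and output (the actual set in the screenshots may differ); `resolve`, `AmbiguityError` and the `priority` parameter are hypothetical and only illustrate the requested tie-break, not an existing vvvv feature.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    input_type: str
    output_type: str


# Assumed candidate set: every combination of the two types.
INVERT: List[Candidate] = [
    Candidate("Texture", "Texture"),
    Candidate("ShaderNode<Vector4>", "Texture"),
    Candidate("Texture", "ShaderNode<Vector4>"),
    Candidate("ShaderNode<Vector4>", "ShaderNode<Vector4>"),
]


class AmbiguityError(Exception):
    pass


def resolve(candidates: Sequence[Candidate], required_output: str,
            priority: Optional[Sequence[str]] = None) -> Candidate:
    matches = [c for c in candidates if c.output_type == required_output]
    if not matches:
        raise LookupError(f"no candidate outputs {required_output}")
    if len(matches) == 1:
        return matches[0]
    if priority is None:
        # The situation described above: several candidates fit, no way to decide.
        raise AmbiguityError(f"{len(matches)} candidates output {required_output}")

    # Hypothetical "type priorities when in doubt": prefer the input type ranked first.
    def rank(c: Candidate) -> int:
        return priority.index(c.input_type) if c.input_type in priority else len(priority)

    ranked = sorted(matches, key=rank)
    if rank(ranked[0]) == rank(ranked[1]):
        raise AmbiguityError("priority does not separate the candidates")
    return ranked[0]
```

Without a priority list, `resolve(INVERT, "Texture")` fails; with `["ShaderNode<Vector4>", "Texture"]` it settles on the candidate that consumes a ShaderNode<Vector4>.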

At first, using a TypeSwitch sounds like a more realistic option. First of all, you know the candidates. And also, a TypeSwitch lets you prioritize one option over the other. Using a type switch normally means that you work with object and decide at runtime what to do, depending on the actual type of the object flowing through. So in the user patch, you’d have object as the compile-time data type in order to communicate with the downstream node.
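The prioritization aspect of a type switch boils down to "the first matching case wins". The sketch below shows that with plain Python classes; `Texture`, `ShaderNode` (standing in for ShaderNode<Vector4>) and `RenderTargetView` (a made-up value usable as both) are hypothetical placeholders, not the actual Stride or vvvv types.

```python
from typing import Callable, Sequence, Tuple


class Texture: ...
class ShaderNode: ...  # stand-in for ShaderNode<Vector4>


class RenderTargetView(Texture, ShaderNode):
    """Hypothetical value that is usable as both types at once."""


Case = Tuple[type, Callable[[object], str]]


def type_switch(value: object, cases: Sequence[Case]) -> str:
    # Cases are tried in order, so their position in the list is their priority.
    for expected, handler in cases:
        if isinstance(value, expected):
            return handler(value)
    raise TypeError(f"unhandled type {type(value).__name__}")


INVERT_CASES = [
    (ShaderNode, lambda v: "invert in shader, no extra pass"),
    (Texture, lambda v: "invert with a render pass"),
]
```

A plain `Texture()` takes the second branch, while a `RenderTargetView()` matches both and therefore ends up in the ShaderNode branch, simply because it is listed first.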

However, as you pointed out, you also need to be able to communicate upstream. This is where the adaptive system is typically better. The question is whether there is a way to combine the two systems.

Just thinking out loud: so we’d have object, Texture and ShaderNode<Vector4> as potential candidates at compile time.

Blur -(object)→ Invert -(Texture)→ Blur

Blur could be adaptive, Texture → T, with the implementations

- Texture → Texture

- Texture → ShaderNode<Vector4>

- Texture → object

Its input is always Texture, and you want to communicate this upstream.

Invert could be adaptive, object → T, with the implementations

- object → Texture

- object → ShaderNode<Vector4>

- object → object

The first candidate would get picked, as it needs to output Texture.

This should in turn determine the overload for the upstream Blur (Texture → object). Its implementation would make use of ShaderNode<Vector4>, since this doesn’t require an extra render pass and the downstream node doesn’t seem to care.
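The upstream propagation in this walkthrough can be modeled as a walk from the last node back to the first, where each pick fixes the type the next upstream node has to output. The candidate lists are the ones given above; `pick` and `resolve_chain` are hypothetical helpers, and exact type-name matching is a simplification (a real resolver would also have to consider that a Texture is assignable to object, which is where ambiguities creep back in).

```python
from typing import List, Sequence, Tuple

Signature = Tuple[str, str]  # (input type, output type)

# Candidate sets as listed above; link types are treated as fixed names.
BLUR: List[Signature] = [
    ("Texture", "Texture"),
    ("Texture", "ShaderNode<Vector4>"),
    ("Texture", "object"),
]
INVERT: List[Signature] = [
    ("object", "Texture"),
    ("object", "ShaderNode<Vector4>"),
    ("object", "object"),
]


def pick(candidates: Sequence[Signature], required_output: str) -> Signature:
    matches = [c for c in candidates if c[1] == required_output]
    if len(matches) != 1:
        raise LookupError(f"{len(matches)} candidates output {required_output}")
    return matches[0]


def resolve_chain(chain: Sequence[Sequence[Signature]], sink_input: str) -> List[Signature]:
    """Walk from the sink upstream; each pick fixes what the upstream node must output."""
    required = sink_input
    picked: List[Signature] = []
    for candidates in reversed(chain):
        signature = pick(candidates, required)
        picked.append(signature)
        required = signature[0]  # this node's input is the upstream node's output
    picked.reverse()
    return picked
```

For the chain Blur → Invert feeding the final Blur (whose input is always Texture), `resolve_chain([BLUR, INVERT], "Texture")` yields Texture → object for the first Blur and object → Texture for Invert, matching the reasoning above.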

I’m not completely sure, but it sounds like the combination of

- compile-time type inference + adaptive node picking in order to communicate upstream and

- the type switch in order to select the right approach at runtime depending on the input

could somehow work out.
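Here is a sketch of how the two halves might meet at runtime, assuming the overloads were already picked at compile time as in the chain above: the Blur implementation behind Texture → object stays lazy and hands out a ShaderNode, and the Invert implementation behind object → Texture uses a type switch to either fuse its work into that shader or fall back to an extra pass. All types and functions are hypothetical placeholders, and the `passes` counter only exists to make the saved render pass visible.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Texture:
    passes: int                    # render passes spent so far (for illustration)
    applied: Tuple[str, ...] = ()  # effects baked into the pixels


@dataclass(frozen=True)
class ShaderNode:                  # stand-in for ShaderNode<Vector4>
    source: Texture
    ops: Tuple[str, ...]


def blur_to_object(texture: Texture) -> object:
    # Implementation behind Blur (Texture -> object): stay lazy, no render pass.
    return ShaderNode(texture, ("blur",))


def render(node: ShaderNode) -> Texture:
    # One render pass evaluates all accumulated operations at once.
    return Texture(node.source.passes + 1, node.source.applied + node.ops)


def invert_to_texture(value: object) -> Texture:
    # Implementation behind Invert (object -> Texture): runtime type switch,
    # ShaderNode first because it lets us fuse the work into a single pass.
    if isinstance(value, ShaderNode):
        return render(ShaderNode(value.source, value.ops + ("invert",)))
    if isinstance(value, Texture):
        return render(ShaderNode(value, ("invert",)))
    raise TypeError(f"Invert cannot handle {type(value).__name__}")
```

Fed by the lazy Blur, Invert produces its result in one pass; fed by an already rendered Texture, the same node still works but needs a second pass.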

And maybe the “adaptive process nodes” used here could somehow be realized with what I mentioned above, but that sounds like a lot of work. No matter how you put it, and no matter which features we have or don’t have, it’s going to be tough.

So maybe it’s doable right now. But it would be a bit easier if there were

- adaptive process nodes

- type priorities when in doubt

Let’s digest this.

Last but not least: you could of course also invent your own type which flows over all the links and allows the downstream nodes to talk to the nodes upstream in order to tell them what is acceptable or not.

This would be a classic approach where the library doesn’t heavily depend on the language features but comes up with the (runtime) logic itself.

The only annoyance then is typically the conversion nodes that are often necessary to enter this world and to leave it again.
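A minimal sketch of that library-level approach, assuming a single hypothetical link type `Image` whose representations are produced lazily on request: each downstream node states what it accepts, most preferred first, and the upstream node answers with the first representation it can deliver. Strings stand in for real GPU resources, and `blur`, `invert`, `from_texture` and `to_texture` are made-up names for illustration; the last two are the conversion nodes mentioned above.

```python
from typing import Callable, Dict, Sequence, Tuple


class Image:
    """Hypothetical link type: offers one or more representations, produced lazily."""

    def __init__(self, producers: Dict[str, Callable[[], str]]):
        self._producers = producers

    def get(self, accepted: Sequence[str]) -> Tuple[str, str]:
        # The downstream node lists what it accepts, most preferred first;
        # the upstream node answers with the first representation it can produce.
        for fmt in accepted:
            if fmt in self._producers:
                return fmt, self._producers[fmt]()
        raise TypeError(f"no common representation among {list(accepted)}")


def _shader_op(name: str, upstream: Image) -> Image:
    def as_shader() -> str:
        # Prefer the upstream shader so that no intermediate render pass is needed.
        _, value = upstream.get(["shader", "texture"])
        return f"{name}({value})"
    return Image({"shader": as_shader, "texture": lambda: f"render({as_shader()})"})


def blur(image: Image) -> Image:
    return _shader_op("blur", image)


def invert(image: Image) -> Image:
    return _shader_op("invert", image)


# Conversion nodes to enter and leave the Image world.
def from_texture(texture: str) -> Image:
    return Image({"texture": lambda: texture})


def to_texture(image: Image) -> str:
    _, value = image.get(["texture"])
    return value
```

For Blur → Invert → Blur, `to_texture(blur(invert(blur(from_texture("tex")))))` returns `"render(blur(invert(blur(tex))))"`: the negotiation happens entirely at runtime and results in a single render at the exit node, without relying on any language feature.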