Hello,

I've been fiddling with http://www.depthkit.tv/. It's the old RGBD software they made the Clouds documentary with.

It's a neat piece of software for calibrating your Kinect and SLR, filming a scene and exporting in various formats. All of this can be done in v4, but it works quite nicely and is well worth the 100 euro artist's license.

Anyway…

I'm trying to find a way to use the available export formats in a realtime v4 setup that switches between loads of recorded clips (I'm in a VR movie type of scenario).

Solution 1: OBJ and image sequences. This could be OK, except that it's a bit of a hassle when dealing with sound.

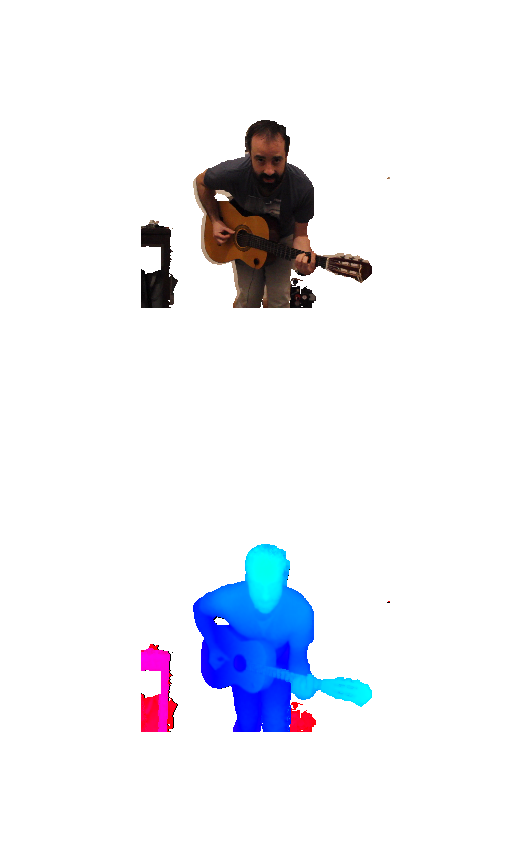

Solution 2: Depthkit can export a nice little video containing depth and texture data. This is far more optimized, since it discards a lot of unnecessary stuff. It looks like this. Sorry for the pyjama rocker style.

Shaders.zip (4.2 KB)

It would be great to extract the 3D data from this texture, but I don't have that amount of shader juice in my fridge.

Depthkit includes some shaders that do this job in Unity. They're attached here in case anyone understands better what's going on.
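To make the idea concrete, here is a rough CPU-side sketch in Python of what such a shader might be doing. It rests on several assumptions that are not confirmed by anything in this post: that the top half of each frame holds the colour image, that the bottom half holds depth encoded as hue, that hue maps linearly onto a near/far range, and that a simple pinhole model is enough to unproject pixels. The function name `decode_frame` and the parameters `near`, `far`, `fx`, `fy`, `cx`, `cy` are made up for illustration; the real values and layout would have to come from the attached shaders and the export metadata.

```python
import colorsys


def decode_frame(frame, near, far, fx, fy, cx, cy):
    """Turn one combined frame into a list of coloured 3D points.

    frame: list of rows, each row a list of (r, g, b) floats in 0..1.
    ASSUMED layout: top half = colour, bottom half = hue-encoded depth.
    near/far: ASSUMED depth range that hue 0..1 is mapped onto.
    fx, fy, cx, cy: hypothetical pinhole intrinsics of the depth camera.
    """
    height = len(frame) // 2
    points = []
    for v in range(height):
        color_row = frame[v]
        depth_row = frame[v + height]
        for u, (r, g, b) in enumerate(depth_row):
            hue, sat, val = colorsys.rgb_to_hsv(r, g, b)
            # ASSUMPTION: dark or unsaturated pixels carry no depth sample.
            if sat < 0.5 or val < 0.5:
                continue
            # ASSUMPTION: hue maps linearly to depth between near and far.
            z = near + hue * (far - near)
            # Standard pinhole unprojection from pixel (u, v) to camera space.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append(((x, y, z), color_row[u]))
    return points
```

In a realtime patch the same per-pixel logic would live in a vertex or compute shader that samples the video texture, so treat this only as a readable reference for the math, not as something to run per frame.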

Working with that and the HAP player, for example, would be a great way to load tons of filmed animations with embedded sound etc.

That's all. Maybe someone would be interested in helping integrate this tool with v4.

cheers