A set of nodes to encode and decode Linear (or Longitudinal) Timecode (LTC) in VL.Audio.

Code borrowed from VVVV.Audio by @tonfilm. Depends on libltc by Robin Gareus and LTCSharp (enclosed) by @elliotwoods.

Initial development and release sponsored by wirmachenbunt.

It’s not a proper NuGet package yet, for the reasons stated below, but it can be used just like one. The thing to keep in mind: if you export your application, you’ll have to copy Ijwhost.dll from VL.Audio.LTC\lib\net6.0-windows or VL.Audio.LTC\runtimes\win-x64\native into the application folder manually.
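If you export often, the manual copy step can be scripted. The following is only a minimal sketch in Python: the function name `copy_shim` and its parameters are made up for illustration, and it simply assumes the shim sits in one of the two package folders mentioned above.

```python
import shutil
from pathlib import Path


def copy_shim(package_dir, app_dir, shim_name="Ijwhost.dll"):
    """Copy the shim from the package folder into the exported app folder.

    package_dir: path to the VL.Audio.LTC package folder (assumption: it
                 contains lib/net6.0-windows or runtimes/win-x64/native).
    app_dir:     folder of the exported application.
    Returns the path of the copied file.
    """
    package_dir = Path(package_dir)
    candidates = [
        package_dir / "lib" / "net6.0-windows" / shim_name,
        package_dir / "runtimes" / "win-x64" / "native" / shim_name,
    ]
    for candidate in candidates:
        if candidate.is_file():
            target = Path(app_dir) / shim_name
            shutil.copy2(candidate, target)  # keeps timestamps as well
            return target
    raise FileNotFoundError(f"{shim_name} not found below {package_dir}")
```

You would call it once after each export, e.g. `copy_shim(r"path\to\VL.Audio.LTC", r"path\to\exported\app")`, with both paths adjusted to your setup.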

Issues with C++/CLI

C++/CLI libraries in .NET Core need a shim called Ijwhost.dll for finding and loading the runtime. Currently there seems to be no “official” way to deal with this shim when shipping a C++/CLI library in a NuGet package. Some say to include it in the package, others say that this can easily lead to DLL hell (what happens when two packages come with different versions of the shim?). Here are some related GitHub issues with further info:

- No Ijwhost.dll when referencing C++/CLR NuGet package from .NET 6 C# app

- How to avoid double writes for ijwhost.dll in NuGet packages?

- C++/CLI libraries may fail to load due to ijwhost.dll not being on the search path

- Ijwhost.dll loading not always working for C++/CLI assembly

- FileNotFoundException in .net 6

I tried the “workaround” of including a manifest file for Ijwhost.dll, but:

- vvvv only finds the shim when it is located alongside LTCSharp.dll, i.e. when both files are in VL.Audio.LTC\lib\net6.0-windows

- when the shim is located in VL.Audio.LTC\runtimes\win-x64\native, as suggested by some, vvvv doesn’t pick it up

- in neither case does the shim get copied to the output when exporting a document referencing VL.Audio.LTC

It’d be nice if the devvvvs could look into this.*

Some Remarks

LTC only supports framerates of 24, 25 and 30 FPS that are traditionally encountered in the context of film/video. As long as your software runs at one of those framerates all should be fine and dandy: seconds will be split into frames [0 … 23], [0 … 24] or [0 … 29] respectively.

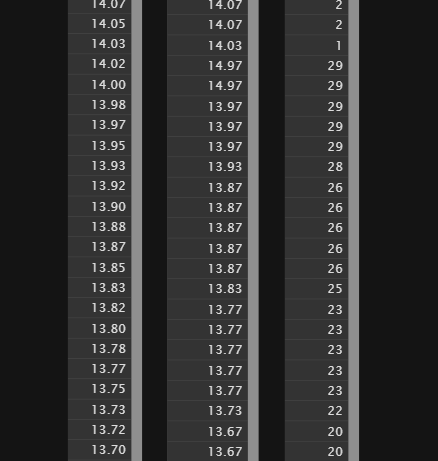

60 (or 50) FPS, which you’d more likely use in real-time media, are not supported though. You need to pick an LTC standard whose framerate is a divisor of your desired framerate. Each frame number will then be returned repeatedly, as many times as the ratio of that division. So for a desired mainloop framerate of 60 FPS you’d pick an LTC standard that uses 30 FPS; the decoder will then ideally return the frames [0,0,1,1,2,2,3,3 … 29,29] and you need to account for that (see Interpolate on the ToSeconds node).
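To illustrate what accounting for the repeated frames can look like, here is a rough sketch in Python. It is not the implementation of the ToSeconds node; the class `FrameInterpolator` and all its names are hypothetical, and it assumes non-drop-frame timecode and a mainloop rate that is an integer multiple of the LTC rate.

```python
class FrameInterpolator:
    """Turn repeated LTC frames into evenly spaced seconds (sketch only)."""

    def __init__(self, ltc_fps, mainloop_fps):
        if mainloop_fps % ltc_fps != 0:
            raise ValueError("LTC rate must be a divisor of the mainloop rate")
        self.ltc_fps = ltc_fps
        self.repeat = mainloop_fps // ltc_fps  # e.g. 60 / 30 = 2
        self.last = None                       # last timecode seen
        self.count = 0                         # repeats of that timecode so far

    def update(self, hours, minutes, seconds, frame):
        """Call once per mainloop frame with the decoded timecode."""
        timecode = (hours, minutes, seconds, frame)
        if timecode == self.last:
            # Same frame again: step forward, but never past the next frame.
            self.count = min(self.count + 1, self.repeat - 1)
        else:
            self.last = timecode
            self.count = 0
        base = hours * 3600 + minutes * 60 + seconds + frame / self.ltc_fps
        return base + self.count / (self.ltc_fps * self.repeat)
```

With 30 FPS LTC and a 60 FPS mainloop, feeding the frames 0, 0, 1, 1 yields 0, 1/60, 2/60 and 3/60 seconds instead of two pairs of identical values.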

But things can get finicky. During testing I encountered, for example, [0,0,1,1,1,2,3,3 … 29,29], and @tgd even reported that some frames got lost completely, as in [0,0,1,1,1,1,3,3 … 29,29]. From what I can tell this is more likely to happen when using WASAPI or ASIO with an audio buffer size >256. It might be hardware and/or driver dependent. So make sure to check the continuity of the incoming frames (by queuing them, for example).
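A continuity check on a queue of frame numbers could look roughly like this. It is a Python sketch under assumptions, not something that ships with the nodes: `check_continuity` is a made-up name, `repeat` is the ratio of mainloop framerate to LTC framerate, and the first and last run are allowed to be shorter because the queue may cut them off.

```python
from itertools import groupby


def check_continuity(frames, ltc_fps=30, repeat=2):
    """Return a list of problems found in a queue of decoded frame numbers."""
    problems = []
    # Collapse the queue into runs: [0,0,1,1,1] -> [(0, 2), (1, 3)]
    runs = [(value, len(list(group))) for value, group in groupby(frames)]
    for index, (value, length) in enumerate(runs):
        is_edge = index == 0 or index == len(runs) - 1
        # Too many repeats is always wrong; too few only inside the queue.
        if length > repeat or (length < repeat and not is_edge):
            problems.append(f"frame {value} seen {length}x, expected {repeat}x")
        if index > 0:
            previous = runs[index - 1][0]
            expected = (previous + 1) % ltc_fps  # wraps from 29 back to 0
            if value != expected:
                problems.append(
                    f"frame {expected} missing (jump from {previous} to {value})"
                )
    return problems
```

For the two faulty sequences above it reports the over-long run of frame 1 in both cases, plus the shortened frame 2 in the first and the missing frame 2 in the second; a clean [0,0,1,1,2,2,3,3] gives an empty list.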

TBH, if you are working in a professional context and can spare the money, using a dedicated timecode reader card might be the better option.

* I wanted to see how it works with other C++/CLI nugets so I tried VST.Net (which was also my cheat sheet for the manifest stuff) but unfortunately vvvv doesn’t seem to like it. I can reference it and it shows up in the Solution Explorer but no classes or methods are accessible. Idk if this is related to my original issue or something different.