Hey there!

I’m trying to optimize my LED mapping toolkit for an installation with quite a lot of points (~20k).

I've managed to make everything upstream of the almighty Pipet step about as light as it can be, so now I'm trying to improve performance downstream of Pipet.

With just a few hundred LEDs, I would operate on the individual R/G/B channels of the color spread coming out of Pipet in a regular ForEach region, post-processing and preparing all the data for both on-screen previewing and ArtNet output. Now that we have thousands of LEDs, this approach is struggling quite a bit.

I guess it's time to move this part to buffers (if I remember correctly, back in the Beta days I was using StructuredBuffers and it worked great), but I'm struggling a bit in FUSE land.

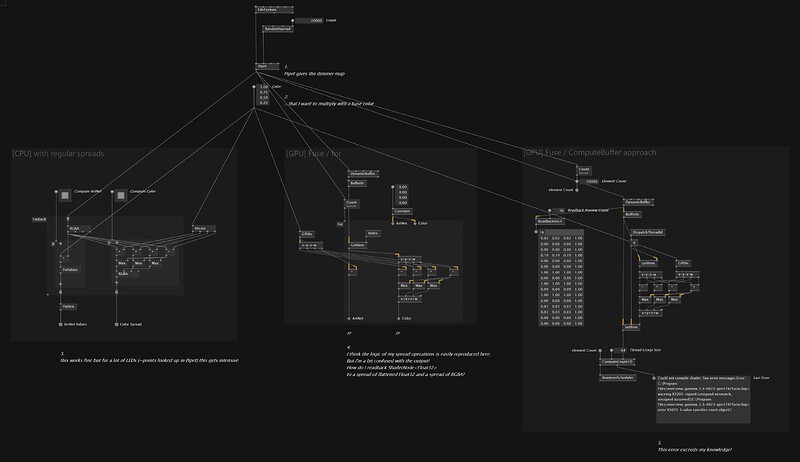

I see two different approaches:

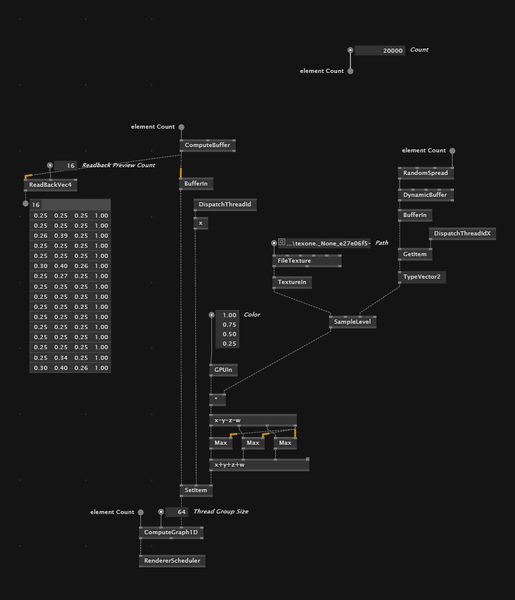

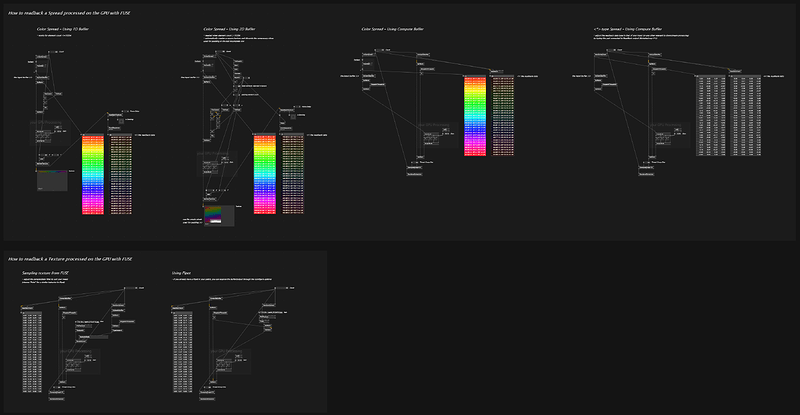

- converting the color spread coming out of Pipet to a DynamicBuffer, and looping over this buffer in a For (Fuse) region to do my operations in GPU land;

- or rather processing this DynamicBuffer with a ComputeBuffer approach.
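For the ComputeBuffer route, the usual compute-shader pattern is one thread per LED: dispatch enough thread groups to cover every element, and have each thread skip out if its index is past the end of the buffer. The Python sketch below only emulates that indexing on the CPU to show the arithmetic; the group size of 64 and the function names are hypothetical, not FUSE's API. In HLSL the dispatch thread ID (`SV_DispatchThreadID`) is unsigned, so comparing it against a signed `int` element count is one plausible place for a signed/unsigned mismatch to appear.

```python
GROUP_SIZE = 64  # hypothetical thread-group size; the real value is declared in the shader


def group_count(element_count: int, group_size: int = GROUP_SIZE) -> int:
    """Thread groups needed so every element gets one thread (integer ceiling division)."""
    return (element_count + group_size - 1) // group_size


def emulate_dispatch(buffer, kernel, group_size: int = GROUP_SIZE):
    """Call `kernel` once per thread, the way a 1D compute dispatch would, but on the CPU."""
    count = len(buffer)
    for group_id in range(group_count(count, group_size)):
        for thread_in_group in range(group_size):
            thread_id = group_id * group_size + thread_in_group  # plays the role of SV_DispatchThreadID.x
            if thread_id >= count:  # the last group is usually only partially filled
                continue
            kernel(buffer, thread_id)


def scale_kernel(buffer, i):
    # Example per-element operation: halve each value in place.
    buffer[i] = buffer[i] * 0.5
```

With ~20k LEDs and a group size of 64, `group_count(20000)` gives 313 groups (20,032 threads), so the bounds check is what keeps the last 32 threads from reading or writing past the end of the buffer.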

First question: is one of these two approaches better suited to this context?

Second question: I'm struggling to get either one working anyway 😅

Attached is a patch of my current WIP with both approaches and a simplistic pipeline: my Pipet looks up a black/white texture that acts as my LEDs' dimmer, and I want to multiply it by a color. The result should serve both as a color spread for my scene preview (basically sent to an InstancingSpread in Stride) and as my ArtNet output (R/G/B/W channels serialized).
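As a reference for what the GPU version has to reproduce, here is a CPU-side sketch of the per-LED math described above: each dimmer value sampled by Pipet multiplies one RGBW color, the result feeds the preview, and the same values are quantized to 8-bit and serialized as R, G, B, W channels. It assumes dimmer values in 0..1 (one channel of the black/white texture), a single RGBW color in 0..1, and 4 DMX channels per LED; the function names are hypothetical. The split into 512-channel universes follows DMX512, with the added assumption that no LED's channels straddle two universes (512 / 4 = 128 LEDs per universe).

```python
from typing import List, Tuple

RGBW = Tuple[float, float, float, float]

CHANNELS_PER_LED = 4  # R, G, B, W (assumed fixture layout)
UNIVERSE_SIZE = 512   # DMX512 channels per universe
LEDS_PER_UNIVERSE = UNIVERSE_SIZE // CHANNELS_PER_LED  # 128, so no LED straddles two universes


def apply_dimmer(dimmers: List[float], color: RGBW) -> List[RGBW]:
    """Multiply one RGBW color by each LED's dimmer value (the preview colors)."""
    return [tuple(d * c for c in color) for d in dimmers]


def to_byte(value: float) -> int:
    """Clamp a 0..1 float and quantize it to a DMX channel value 0..255."""
    return round(min(max(value, 0.0), 1.0) * 255)


def serialize_universes(colors: List[RGBW]) -> List[bytes]:
    """Flatten the colors to R, G, B, W bytes, packing 128 LEDs per universe."""
    universes = []
    for start in range(0, len(colors), LEDS_PER_UNIVERSE):
        chunk = colors[start:start + LEDS_PER_UNIVERSE]
        universes.append(bytes(to_byte(ch) for led in chunk for ch in led))
    return universes
```

For 20,000 LEDs this yields 157 universes: 156 full ones of 512 bytes and a last one carrying 32 LEDs (128 bytes). On the GPU, the multiply and the quantization map naturally to one thread per LED, while the universe packing is just index arithmetic on the flat byte array.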

- with For: I think I've managed to translate my operations to the GPU, but I don't see how to get the result of my ShaderNode back into regular Spreads.

- with ComputeBuffer: ComputeGraph reports a compilation error about a signed/unsigned mismatch, but I don't really understand where it comes from…

Could you give me any pointers to sort this out?

Thanks a lot!

xp-GPU-Buffers_20231225.vl (68.9 KB)