Hello everyone!

This question might be slightly out of place since it’s not strictly a vvvv gamma question; apologies for that.

Regarding my problem: I am trying to build a face-swapping application that takes an input image of a person and detects and extracts the face in that image. The extracted face should then be superimposed onto the face in another person’s image in a reasonably convincing way (taking into account varying angles and such). Basically, I am following the procedure outlined here: https://learnopencv.com/face-swap-using-opencv-c-python, but rebuilding it in vvvv.

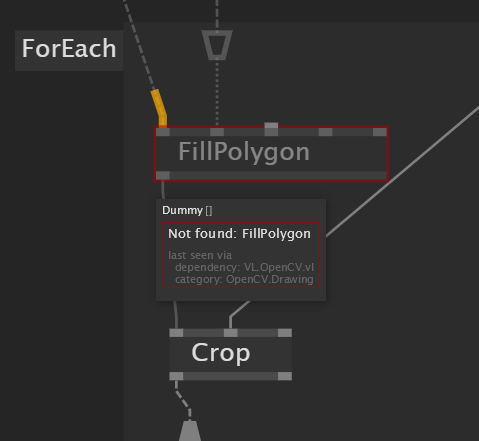

I used the DlibDotNet NuGet package to detect and extract faces with dlib’s 68-point facial landmark model, which worked fine. Then I split the face into triangles using Delaunay triangulation over the 68 landmark points. Now comes the crucial step: each triangle extracted from image 1 has a corresponding triangle in image 2, but the two naturally differ in size and orientation. This is where getAffineTransform comes in (provided by the OpenCvSharp4 NuGet package): it computes a transformation matrix that can be used to apply an affine transformation to each triangle from image 1 so that it fits the corresponding triangle in image 2.
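For reference, this is roughly what getAffineTransform computes: given three source points and three destination points, it solves for the unique 2x3 matrix that maps each source point onto the destination point with the same index. Below is a minimal pure-Python sketch of that calculation (no OpenCV involved; `get_affine_transform` and `apply_affine` are hypothetical names chosen for illustration). One thing it makes visible: the vertex order has to match in both triangles. If the vertices of a triangle in image 2 are listed in a different order than in image 1, the resulting matrix could rotate or flip the patch.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix2x3 = List[List[float]]


def get_affine_transform(src: Sequence[Point], dst: Sequence[Point]) -> Matrix2x3:
    """Solve for the 2x3 matrix M such that M * [x, y, 1]^T == dst[i] for each src[i].

    Point order matters: src[i] must correspond to dst[i].
    """
    (x0, y0), (x1, y1), (x2, y2) = src
    # Determinant of A = [[x0, y0, 1], [x1, y1, 1], [x2, y2, 1]].
    det = x0 * (y1 - y2) - y0 * (x1 - x2) + (x1 * y2 - x2 * y1)
    if abs(det) < 1e-12:
        raise ValueError("source triangle is degenerate (collinear points)")
    matrix = []
    for k in range(2):  # k = 0 solves the x' row, k = 1 the y' row
        u0, u1, u2 = dst[0][k], dst[1][k], dst[2][k]
        # Cramer's rule for A @ [a, b, c] = [u0, u1, u2]
        a = (u0 * (y1 - y2) - y0 * (u1 - u2) + (u1 * y2 - u2 * y1)) / det
        b = (x0 * (u1 - u2) - u0 * (x1 - x2) + (x1 * u2 - x2 * u1)) / det
        c = (x0 * (y1 * u2 - y2 * u1) - y0 * (x1 * u2 - x2 * u1)
             + u0 * (x1 * y2 - x2 * y1)) / det
        matrix.append([a, b, c])
    return matrix


def apply_affine(m: Matrix2x3, p: Point) -> Point:
    """Map a single point through the 2x3 affine matrix."""
    x, y = p
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


if __name__ == "__main__":
    # Rotate by 90 degrees, scale by 2, then translate by (5, 5).
    m = get_affine_transform([(0, 0), (10, 0), (0, 10)], [(5, 5), (5, 25), (-15, 5)])
    print(m)  # [[0.0, -2.0, 5.0], [2.0, 0.0, 5.0]]
```

The OpenCV call returns the same kind of 2x3 matrix, which warpAffine then applies to every pixel of the (cropped) source image.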

However, the triangles returned after applying the transformation are not as expected.

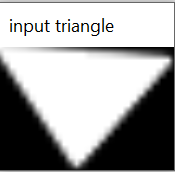

The input looks like this:

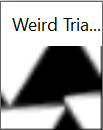

And this is the output I get:

The output is a mirrored image (which I suspect is caused by the border extrapolation mode being set to reflect), but I am not well versed enough in OpenCV or linear algebra to understand the underlying issue.
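As far as I can tell from the linked tutorial, reflected pixels are expected at this stage. There, warpAffine is applied to the triangle’s whole bounding rectangle with `BORDER_REFLECT_101`, so everything outside the warped triangle gets filled with mirrored content. The result is then multiplied by a triangle mask (drawn with fillConvexPoly), so only pixels inside the destination triangle end up in image 2. The tutorial also subtracts each bounding rectangle’s top-left corner from the triangle points before calling getAffineTransform. If absolute image coordinates are used while only the cropped rectangle is warped, the triangle could land mostly outside the crop, leaving mainly border extrapolation visible. The sketch below shows the same masking idea in pure Python. It is not the tutorial’s code: it uses inverse mapping with nearest-neighbour sampling per pixel instead of warpAffine plus a mask, and `warp_triangle`, `inside_triangle` and `affine_from_triangles` are hypothetical helper names.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Image = List[List[int]]  # grayscale, indexed as image[row][col]


def affine_from_triangles(src: Sequence[Point], dst: Sequence[Point]) -> List[List[float]]:
    """2x3 matrix mapping src[i] -> dst[i] (what getAffineTransform computes)."""
    (x0, y0), (x1, y1), (x2, y2) = src
    det = x0 * (y1 - y2) - y0 * (x1 - x2) + (x1 * y2 - x2 * y1)
    rows = []
    for k in range(2):  # Cramer's rule, one row of the matrix at a time
        u0, u1, u2 = dst[0][k], dst[1][k], dst[2][k]
        rows.append([
            (u0 * (y1 - y2) - y0 * (u1 - u2) + (u1 * y2 - u2 * y1)) / det,
            (x0 * (u1 - u2) - u0 * (x1 - x2) + (x1 * u2 - x2 * u1)) / det,
            (x0 * (y1 * u2 - y2 * u1) - y0 * (x1 * u2 - x2 * u1)
             + u0 * (x1 * y2 - x2 * y1)) / det,
        ])
    return rows


def inside_triangle(p: Point, tri: Sequence[Point]) -> bool:
    """True if p lies inside or on an edge of tri (edge cross-product sign test)."""
    px, py = p
    signs = []
    for i in range(3):
        (ax, ay), (bx, by) = tri[i], tri[(i + 1) % 3]
        signs.append((bx - ax) * (py - ay) - (by - ay) * (px - ax))
    return all(s >= 0 for s in signs) or all(s <= 0 for s in signs)


def warp_triangle(src_img: Image, dst_img: Image,
                  src_tri: Sequence[Point], dst_tri: Sequence[Point]) -> None:
    """Copy the src_tri region of src_img into the dst_tri region of dst_img, in place."""
    # Inverse mapping: for each destination pixel, find its source position.
    m = affine_from_triangles(dst_tri, src_tri)
    h, w = len(src_img), len(src_img[0])
    xs = [p[0] for p in dst_tri]
    ys = [p[1] for p in dst_tri]
    # Visit only the destination triangle's bounding rectangle...
    for y in range(max(0, int(min(ys))), min(len(dst_img), int(max(ys)) + 1)):
        for x in range(max(0, int(min(xs))), min(len(dst_img[0]), int(max(xs)) + 1)):
            # ...and skip pixels outside the triangle (the "mask" step).
            if not inside_triangle((x, y), dst_tri):
                continue
            sx = m[0][0] * x + m[0][1] * y + m[0][2]
            sy = m[1][0] * x + m[1][1] * y + m[1][2]
            # Nearest-neighbour sample, clamped to the source image bounds.
            sxi = min(max(int(round(sx)), 0), w - 1)
            syi = min(max(int(round(sy)), 0), h - 1)
            dst_img[y][x] = src_img[syi][sxi]


if __name__ == "__main__":
    src = [[r * 10 + c + 1 for c in range(4)] for r in range(4)]
    dst = [[0] * 4 for _ in range(4)]
    # Move the top-left triangle of src to the bottom-right corner of dst.
    warp_triangle(src, dst, [(0, 0), (1, 0), (0, 1)], [(2, 2), (3, 2), (2, 3)])
    print(dst[2][2], dst[2][3], dst[3][2], dst[3][3])  # 1 2 11 0
```

Pixels outside the destination triangle (like `dst[3][3]` above) are never written, which is the effect the mask has in the OpenCV version.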

This video shows a bit better what kind of output I am trying to achieve:

https://youtu.be/4pu54BDXYw8?t=1615

So I was hoping someone here could give me a hint as to what is going on.

Any help is greatly appreciated!

This is my patch:

https://we.tl/t-fkUgCtzmDK

Edit: I was told @ravazquez might be able to help?