Hello everybody,

I’m currently working on a Kinect installation and I stumbled upon a problem I thought had been solved a while ago… but apparently it isn’t… or I just couldn’t find the solution :)

What’s the current “state of the art” method for depth repair in our lovvvvely community?

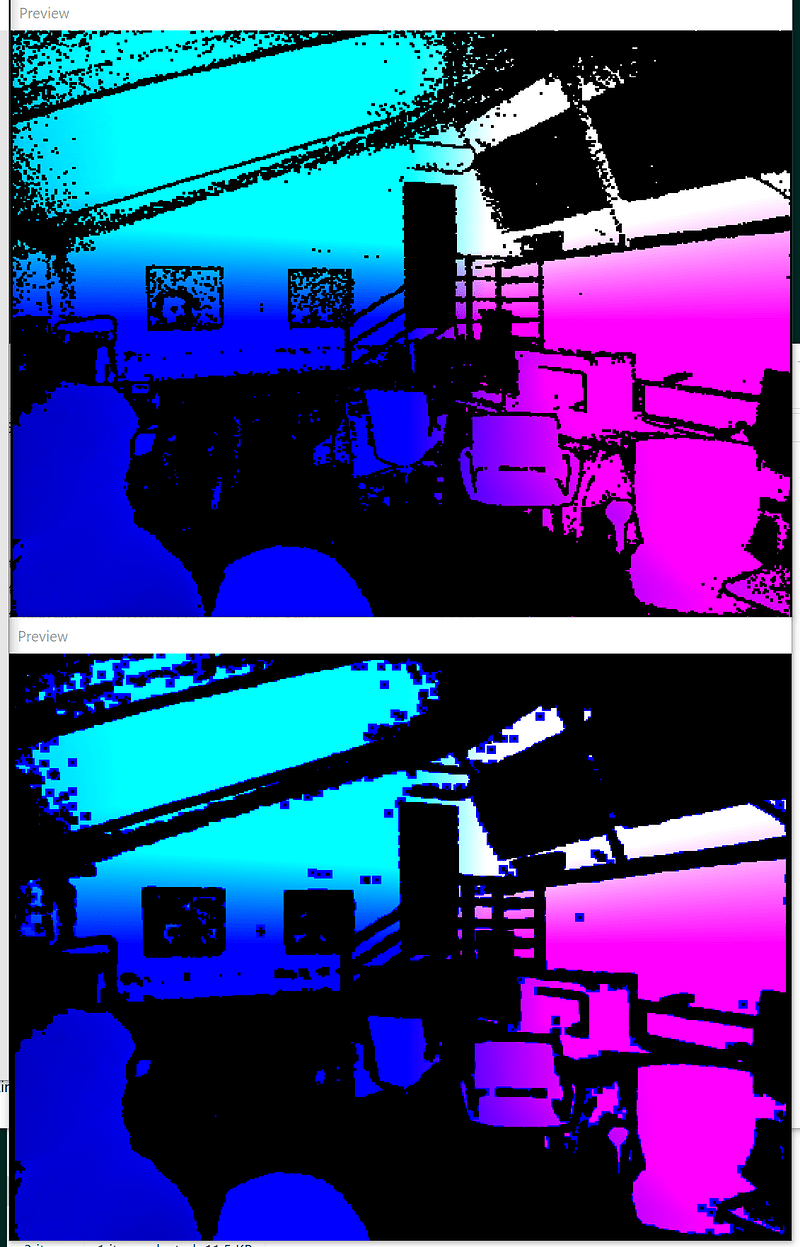

I tried the WorldRepair node from the DX11.Particles library, but it looks broken:

(Top: raw depth; bottom: depth processed with WorldRepair)

This depth repair algorithm looks good:

https://vvvv.org/contribution/kinect-depth-fill

but it is incomplete: you still need to build the worldTexture from the repaired depth and retrieve the color for the filled holes in the depth texture.
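One possible way to build the world texture from the repaired depth (my assumption, not necessarily how the linked contribution or WorldRepair work) is to back-project every depth pixel through a per-pixel ray table: each entry holds the (x, y) of that pixel's ray at z = 1, so the camera-space point is (x·z, y·z, z). The Kinect v2 SDK can provide such a table through its coordinate mapper. The sketch below instead builds a hypothetical pinhole table from assumed intrinsics (`fx`, `fy`, `cx`, `cy`), uses plain Python lists instead of textures, and fetches the color position of a (filled) pixel by projecting its point into a color camera that is assumed to differ from the depth camera only by a translation `t`.

```python
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]
RayTable = List[List[Tuple[float, float]]]


def make_ray_table(width: int, height: int, fx: float, fy: float,
                   cx: float, cy: float) -> RayTable:
    """Hypothetical pinhole ray table: (x, y) of each pixel's ray at z = 1."""
    return [[((u - cx) / fx, (v - cy) / fy) for u in range(width)]
            for v in range(height)]


def depth_to_world(depth_mm: List[List[int]],
                   rays: RayTable) -> List[List[Optional[Point3]]]:
    """Back-project depth (millimetres, 0 = hole) to camera-space metres."""
    world: List[List[Optional[Point3]]] = []
    for row_d, row_r in zip(depth_mm, rays):
        out_row: List[Optional[Point3]] = []
        for d, (rx, ry) in zip(row_d, row_r):
            if d == 0:
                out_row.append(None)  # still a hole: nothing to reconstruct
            else:
                z = d / 1000.0
                out_row.append((rx * z, ry * z, z))
        world.append(out_row)
    return world


def world_to_color_pixel(p: Point3, fx: float, fy: float, cx: float, cy: float,
                         t: Point3 = (0.0, 0.0, 0.0)) -> Tuple[float, float]:
    """Project a camera-space point into an assumed color camera.

    The color camera is assumed to be offset from the depth camera by the
    translation t only (no rotation), with its own pinhole intrinsics.
    """
    x, y, z = p[0] + t[0], p[1] + t[1], p[2] + t[2]
    return (fx * x / z + cx, fy * y / z + cy)
```

Axis conventions differ between SDKs (for example whether y points up), so some signs may need flipping, and a real color lookup would also need the full rotation between the two cameras.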

In general, it would be nice to establish a sort of high-quality standard for handling depth-camera (z-camera) data.

What about developing a vvvv/VL contribution together to tackle just this specific task?

I guess the first step would be collecting all our shader modules.

For the Kinect scenario, it would be nice to have a set of nodes for:

- depth repair (temporal / spatial / spatio-temporal methods… maybe RGB-guided) (the internet is full of papers about this, though most of them aren’t free… damn…)

- world repair

- RGBDepth repair (reconstructing it from the repaired depth + ray table?)

- basic pointcloud/geometry visualizers for debugging
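To have a common baseline to compare the fancier methods from the papers against, the spatial and temporal depth repair items could start from something as simple as the sketch below. It is not taken from any existing vvvv node or paper: it is plain Python on nested lists (a real node would be a compute shader), it assumes depth in millimetres with 0 marking a hole (as the Kinect reports missing readings), and all names (`spatial_fill`, `TemporalFill`, `max_age`) are hypothetical.

```python
from statistics import median_low
from typing import List

Depth = List[List[int]]  # millimetres, 0 = no reading (hole)


def spatial_fill(depth: Depth, radius: int = 1, passes: int = 3) -> Depth:
    """Fill holes with the median of valid neighbours in a (2r+1)^2 window.

    Each pass only reads the previous pass's result, so larger holes
    close from their borders inward over several passes.
    """
    h, w = len(depth), len(depth[0])
    cur = [row[:] for row in depth]
    for _ in range(passes):
        nxt = [row[:] for row in cur]
        for y in range(h):
            for x in range(w):
                if cur[y][x] != 0:
                    continue
                vals = [cur[j][i]
                        for j in range(max(0, y - radius), min(h, y + radius + 1))
                        for i in range(max(0, x - radius), min(w, x + radius + 1))
                        if cur[j][i] != 0]
                if vals:
                    # median_low always returns an existing neighbour value
                    nxt[y][x] = median_low(vals)
        cur = nxt
    return cur


class TemporalFill:
    """Reuse each pixel's last valid depth for up to max_age frames."""

    def __init__(self, max_age: int = 5):
        self.max_age = max_age
        self.last: Depth = []
        self.age: List[List[int]] = []

    def update(self, depth: Depth) -> Depth:
        if not self.last:
            self.last = [row[:] for row in depth]
            self.age = [[0] * len(row) for row in depth]
        out: Depth = []
        for y, row in enumerate(depth):
            out_row = []
            for x, d in enumerate(row):
                if d != 0:
                    # fresh reading: remember it and reset its age
                    self.last[y][x], self.age[y][x] = d, 0
                    out_row.append(d)
                elif self.last[y][x] != 0 and self.age[y][x] < self.max_age:
                    # hole: hold the last valid value for a few frames
                    self.age[y][x] += 1
                    out_row.append(self.last[y][x])
                else:
                    out_row.append(0)  # too stale (or never seen): keep the hole
            out.append(out_row)
        return out
```

A spatio-temporal variant can simply chain the two, e.g. `spatial_fill(temporal.update(frame))`: temporal reuse first, spatial filling for whatever is left. `median_low` is used so a filled pixel always takes an existing neighbour value instead of averaging foreground and background across an object edge.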

It may sound naive, but shall we simply do it?

Cheers

Natan