Hi all, hi Fuse team!

I checked out the latest Fuse release for gamma 5. Pretty cool!

And I have some questions and feedback:

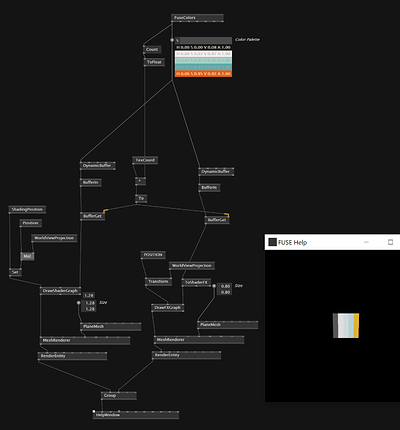

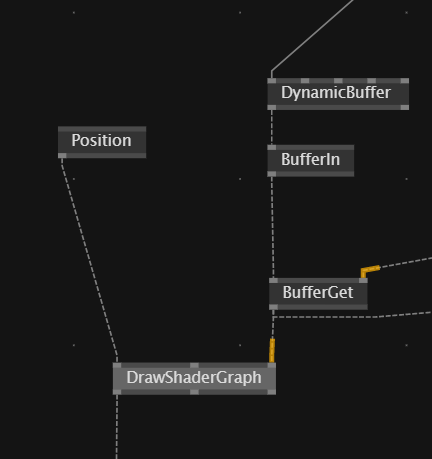

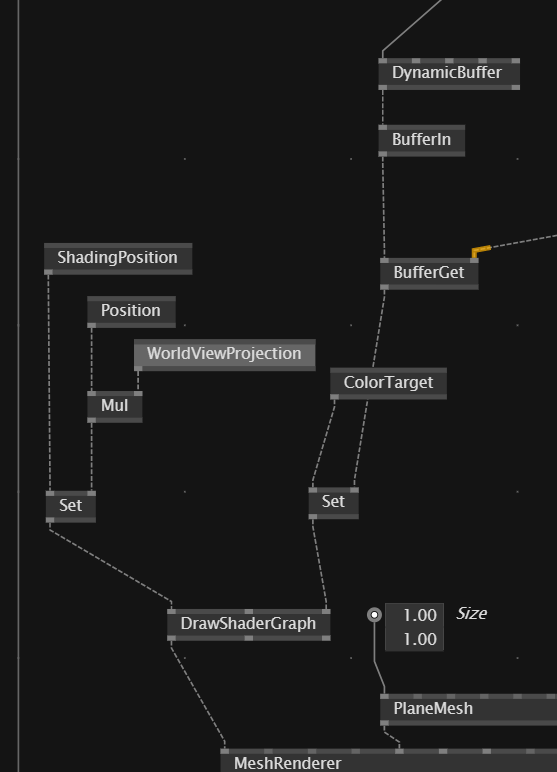

DrawShaderGraph vs DrawFXGraph

I found them confusing because they connect to the mesh renderer the same way but take different inputs. DrawFXGraph seems to take the ShaderFX input.

I tried building the first graph using Fuse nodes and the second using ShaderFX.

Why does it not work with DrawShaderGraph? Attached patch: shaderfxvsshadergraph.vl

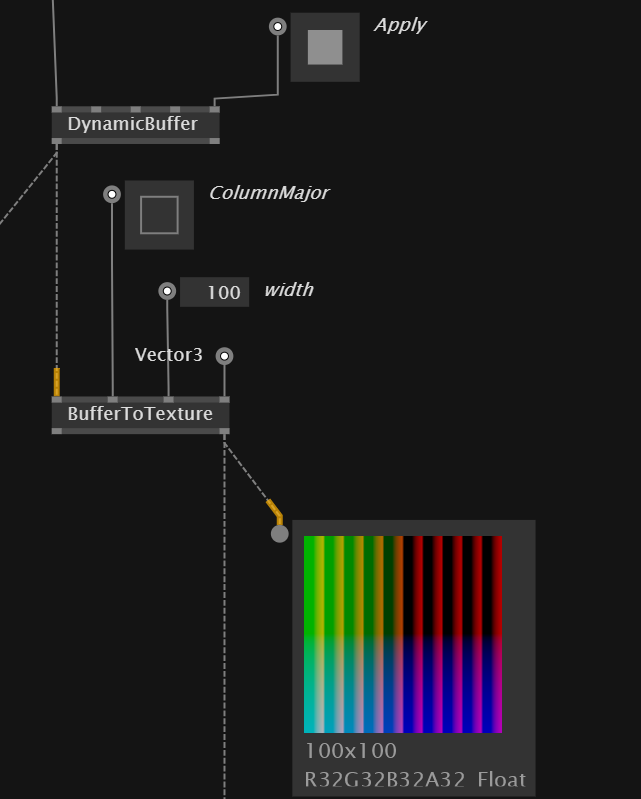

BufferToTexture and TextureToBuffer are super useful for debugging and baking data into textures! Very helpful!
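
To check my own mental model of what these nodes do, here is a minimal Python sketch of the index mapping I assume they use: a flat buffer laid out row by row (row-major), so element `i` lands on texel `(i % width, i // width)`. The function names are my own and hypothetical, not Fuse's API.

```python
def buffer_to_texture(buffer, width):
    """Hypothetical CPU model: lay a flat buffer out as rows of `width` texels (row-major)."""
    if len(buffer) % width != 0:
        raise ValueError("buffer length must be a multiple of width")
    height = len(buffer) // width
    return [buffer[row * width:(row + 1) * width] for row in range(height)]


def texture_to_buffer(texture):
    """Inverse mapping: texel (x, y) goes back to index y * width + x."""
    return [texel for row in texture for texel in row]


values = [0.0, 0.25, 0.5, 0.75, 1.0, 1.25]
tex = buffer_to_texture(values, width=3)
print(tex)  # [[0.0, 0.25, 0.5], [0.75, 1.0, 1.25]]
assert texture_to_buffer(tex) == values  # the round trip is lossless
```

The round trip being lossless is what makes baking handy for debugging: you can look at the texture and still recover every element by index.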

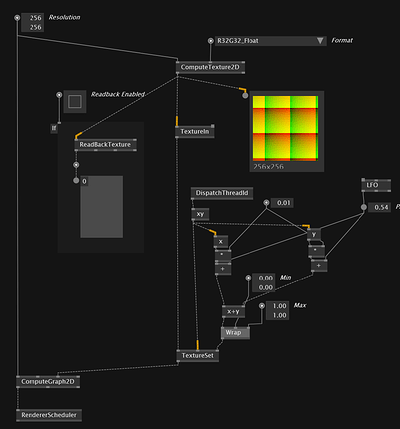

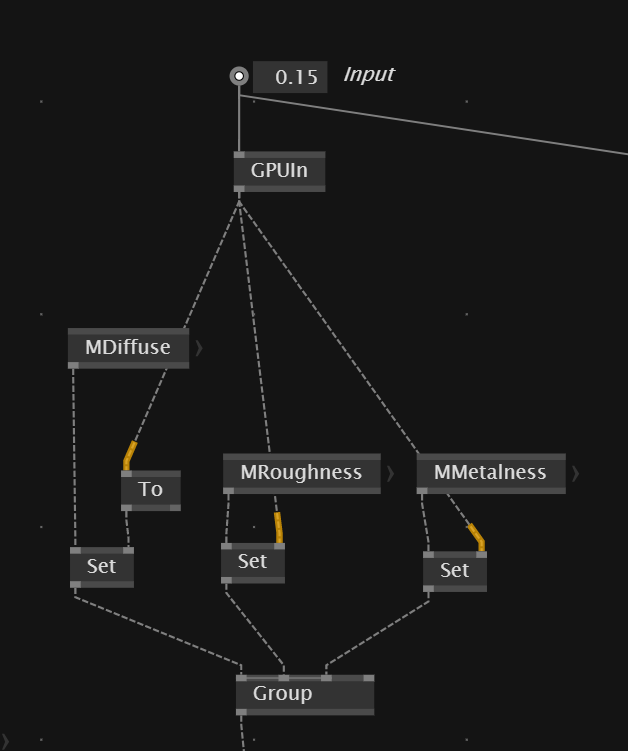

ComputeTexture2D: very easy to set up and understand. Adaptive nodes work great when changing the vector type. Do I get it right that ComputeGraph2D generates the shader code and RendererScheduler is the actual compute renderer?
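
This is how I picture the split, as a minimal CPU-side Python sketch: the graph only describes what happens for one texel (here a plain function), and a separate loop plays the role of the scheduler that runs it once per texel. `compute_texture_2d` and the texel-center UV convention are assumptions for illustration, not how Fuse actually dispatches threads.

```python
def compute_texture_2d(width, height, shader):
    """Hypothetical model of a 2D compute pass: call `shader` once per texel with its UV."""
    texture = []
    for y in range(height):
        row = []
        for x in range(width):
            # Sample at the texel center, normalized to [0, 1].
            uv = ((x + 0.5) / width, (y + 0.5) / height)
            row.append(shader(uv))
        texture.append(row)
    return texture


# The "graph": a per-texel function producing a horizontal gradient.
gradient = compute_texture_2d(4, 2, lambda uv: uv[0])
print(gradient[0])  # [0.125, 0.375, 0.625, 0.875]
```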

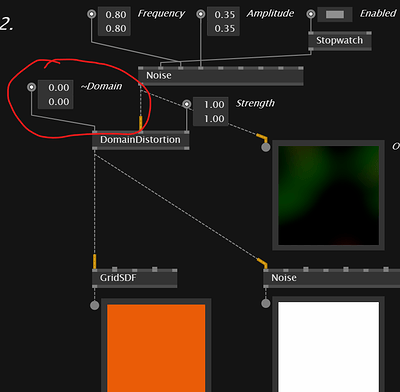

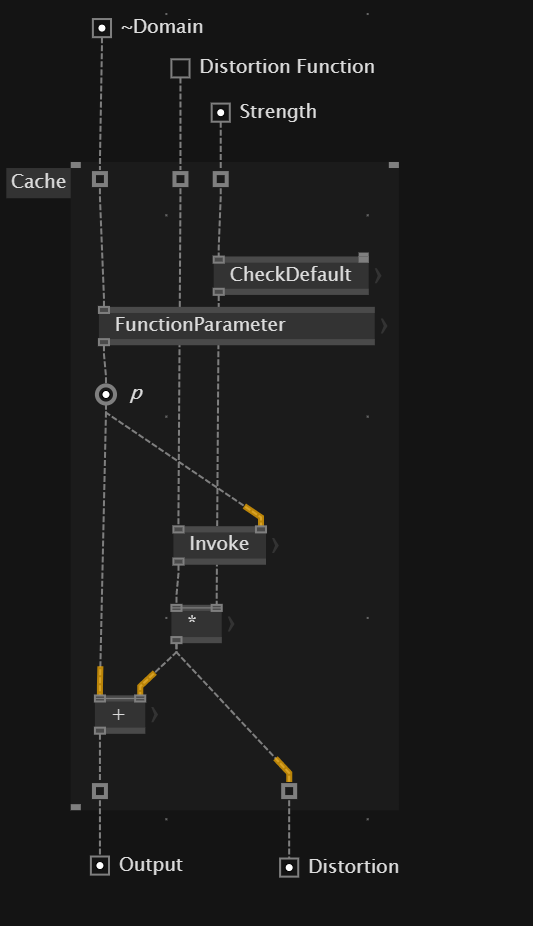

Is domain distortion basically modifying the UV sampling coordinates? And why is there a render error when I input a zero vec2 as the domain? I understand the domain as an offset to the original UVs.
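
Here is the model I have in mind, as a minimal Python sketch assuming the domain input is an offset added to the UVs before sampling. `stripes` and `distort_domain` are hypothetical names. Under this assumption a zero offset should simply reproduce the undistorted pattern, which is why the render error surprised me.

```python
import math


def stripes(uv):
    """Example pattern: vertical sine stripes with values in [0, 1]."""
    return 0.5 + 0.5 * math.sin(uv[0] * 2 * math.pi * 4)


def distort_domain(pattern, offset):
    """Return a new pattern that samples `pattern` at uv + offset(uv)."""
    def distorted(uv):
        dx, dy = offset(uv)
        return pattern((uv[0] + dx, uv[1] + dy))
    return distorted


# A zero offset leaves the pattern unchanged...
identity = distort_domain(stripes, lambda uv: (0.0, 0.0))
# ...while an x offset that depends on y bends the stripes into waves.
wavy = distort_domain(stripes, lambda uv: (0.1 * math.sin(uv[1] * 2 * math.pi), 0.0))

print(identity((0.3, 0.7)) == stripes((0.3, 0.7)))  # True
```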

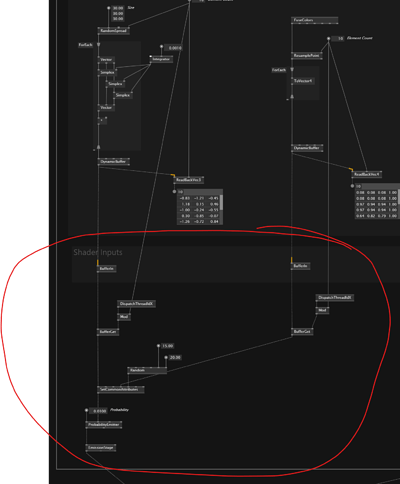

How to use external buffer data:

So the first dynamic buffer is the CPU -> GPU upload, and from BufferIn on we are in the compute world. Did I get this right? If so, the connection “dynamic buffer -> BufferIn -> BufferGet” is pretty hard to grasp if you don’t know how it works under the hood.
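
Here is my mental model as a minimal Python sketch. The three functions are hypothetical stand-ins named after the nodes, not Fuse's API: `upload` snapshots CPU data into a GPU-side buffer, `buffer_in` makes that buffer available to the graph, and `buffer_get` is what each compute thread does to read its own element.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuBuffer:
    """Hypothetical GPU-side buffer: an immutable copy of the uploaded data."""
    data: tuple


def upload(cpu_values):
    """'dynamic buffer' step: copy CPU values to the GPU (modeled as a snapshot)."""
    return GpuBuffer(tuple(cpu_values))


def buffer_in(buffer):
    """'BufferIn' step: expose the GPU buffer as an input of the compute graph."""
    return buffer


def buffer_get(buffer, index):
    """'BufferGet' step: a single compute thread reads the element at its own index."""
    return buffer.data[index]


cpu_values = [1.0, 2.0, 3.0]
graph_input = buffer_in(upload(cpu_values))  # CPU -> GPU, then into the graph
cpu_values[0] = 99.0  # CPU edits after the upload do not reach the GPU copy
# "Compute world": one thread per element, each reading by its thread index.
doubled = [buffer_get(graph_input, i) * 2 for i in range(len(graph_input.data))]
print(doubled)  # [2.0, 4.0, 6.0]
```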

I like everything about the particle system.

AmountEmitterThreaded and the separation between the emission and simulation stages are nicely done! It’s obvious what each element is doing, and it creates an excellent structure.
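
The way I read that structure, as a minimal Python sketch: one stage only spawns a fixed amount of particles per frame into a capped pool, and a separate stage only advances and ages them. `Particle`, `emit`, `simulate` and their parameters are hypothetical and only mirror the idea, not the actual AmountEmitterThreaded implementation.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    position: float
    velocity: float
    age: float = 0.0


def emit(pool, amount, capacity):
    """Emission stage: append up to `amount` new particles without exceeding `capacity`."""
    for _ in range(min(amount, capacity - len(pool))):
        pool.append(Particle(position=0.0, velocity=1.0))


def simulate(pool, dt, lifetime):
    """Simulation stage: advance every particle, then drop the ones past their lifetime."""
    for p in pool:
        p.position += p.velocity * dt
        p.age += dt
    pool[:] = [p for p in pool if p.age < lifetime]


pool = []
for _ in range(3):  # three frames
    emit(pool, amount=2, capacity=5)
    simulate(pool, dt=0.5, lifetime=1.0)
print(len(pool))  # 2
```

Because `emit` never touches existing particles and `simulate` never creates new ones, either stage can be swapped out without changing the other.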

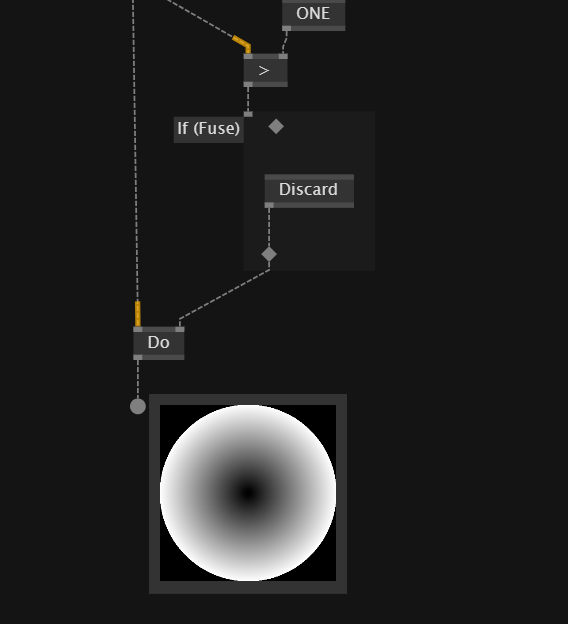

The “discard” patch could mention that discarding can save performance. Since there is no description, it looks like a clipping function. Is setting an invalid vertex position still a way to skip the rasterization stage altogether? If so, a node for that would be nice to have.
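
For reference, this is the trick I mean, as a minimal Python sketch of the clipping rule assuming Direct3D clip-space conventions (`-w <= x, y <= w` and `0 <= z <= w`): a triangle whose vertices all lie outside the same clip plane is thrown away before rasterization, so moving every vertex of a discarded element behind the near plane skips it entirely. `DISCARDED` is a hypothetical position I picked for illustration; whether Fuse's discard works this way is exactly my question.

```python
def outside_planes(v):
    """Return the clip planes that the clip-space vertex (x, y, z, w) lies outside of."""
    x, y, z, w = v
    planes = set()
    if x < -w:
        planes.add("left")
    if x > w:
        planes.add("right")
    if y < -w:
        planes.add("bottom")
    if y > w:
        planes.add("top")
    if z < 0:
        planes.add("near")
    if z > w:
        planes.add("far")
    return planes


def trivially_rejected(triangle):
    """True if all vertices are outside at least one common plane, so nothing is rasterized."""
    common = outside_planes(triangle[0])
    for v in triangle[1:]:
        common &= outside_planes(v)
    return bool(common)


DISCARDED = (0.0, 0.0, -1.0, 1.0)  # hypothetical "discard" position behind the near plane
visible = [(0, 0, 0.5, 1), (1, 0, 0.5, 1), (0, 1, 0.5, 1)]
hidden = [DISCARDED] * 3
print(trivially_rejected(visible), trivially_rejected(hidden))  # False True
```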

The geometry stage nodes are also pretty intuitive. PointToBox is still a bit crowded, but I guess a for-each loop is coming =)

I am pretty stoked for the next release with gamma 5! Performance is pretty good, and compile time is much better than on the old version I worked with. Thank you for all the work! It shows.